The Ethical Implications of Autonomous Vehicles

But what can go wrong? We’ll look at how people relate to AVs, assess the risks of self-driving cars through the looking-glass of ethics and immerse ourselves in an ethical design approach as a potential solution to everything we’re about to cover.

This second part of the article will also address some open questions related to the different levels of automation, which we unpacked in the previous article. However, step with caution towards the end! The article may leave you with a myriad of thoughts on how to answer the big questions and a burning desire to address and solve the problems.

But before we get there, let’s look into the benefits of self-driving cars.

The Benefits of Self-Driving Cars

- Enhanced road safety and crash avoidance: less risky and reckless driving, no speeding over the limit, no drunk AI driving. Potentially lowering the number of deaths by reducing crashes by a staggering 90%;

- Reduced costs: less physical damage, lower medical and vehicle repair costs, not to mention the psychological trauma of a car accident, and potentially lower insurance costs. According to McKinsey, enhanced road safety and crash avoidance could lead to savings of roughly $190B per year;

- Increased mobility & accessibility: more independence for people with disabilities, elderly people and people from underrepresented communities, who would benefit from the assisted driving provided by AVs of level 3 and up;

- Reducing congestion and traffic jams;

- Increased ride-sharing services – with levels 4 & 5 of automation, more ride-sharing models will be adopted;

- Increased quality of life – easier travel, better use of time in the AV (if driving is no longer shared), etc.

Ethical Implications

Now that we have explored the benefits of self-driving cars, it’s high time we discovered the other side of the coin, and it only feels natural to start the conversation about ethics in AVs with the trolley dilemma. Since human error is the main factor in 94% of car accidents (!) (Autonomous Vehicles Factsheet – University of Michigan), self-driving cars will definitely help bring those numbers down, as we have seen above.

Right?

This is where we start thinking about what can go wrong.

Let’s dive into the mind-bending, intricate labyrinth of ethics to explore how the different ethical frameworks may help us address the question, and see what SAP’s findings reveal:

Exploring the Trolley Dilemma of AVs through Ethical Frameworks

Photo credit: Bernd Dittrich

Imagine the following scenario: you are in your self-driving car (automation level 4 or 5) with one other passenger. Suddenly, you realize there is an imminent crash ahead with another car carrying more passengers than yours. Pedestrians may be affected as well. What do you expect your car to do in that situation?

Utilitarianism

Let’s start with utilitarianism, aka the end justifies the means. Utilitarianism is all about weighing the possible outcomes and choosing the action that maximizes the utility function: the greater good. However, what greater good would the AI be programmed to choose? And, more importantly, whose?

Interestingly enough, under a utilitarian ethical framework, when faced with the “greater good” decision, 76% of the subjects leaned towards the greater good of all parties involved in the accident, rather than the greater good of the driver and the AV’s passengers first and of the other people second (prioritized by only 48% of the subjects). So having the AV minimize the loss of life among everyone involved in a potential accident was the prevailing choice.

Other significant, opposing answers were 1) a preference ranking set by the driver, on which the AV would base its decision (i.e., the greater good becomes only the good of the driver and the passengers in their car, or it can mean saving women, children, innocent people such as pedestrians, etc.) and 2) the AV prioritizing the greater good without any input from the driver.
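To make the two survey options concrete, here is a minimal Python sketch of a utilitarian chooser. Everything in it is hypothetical: the `Outcome` fields, the maneuver names and the injury estimates are invented for illustration and do not describe how any real AV, or SAP’s study, works. It only shows that the same maximization rule picks different maneuvers depending on whose good the utility function counts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """Hypothetical predicted outcome of one maneuver (expected serious injuries)."""
    maneuver: str
    own_occupants: float   # people inside the AV
    other_vehicle: float   # people in the other car
    pedestrians: float     # people on foot


def utility_all_parties(o: Outcome) -> float:
    # "Greater good of all parties": every person's harm counts equally.
    return -(o.own_occupants + o.other_vehicle + o.pedestrians)


def utility_occupants_first(o: Outcome) -> tuple[float, float]:
    # "Occupants first, then everyone else": tuples compare lexicographically,
    # so harm to the AV's occupants dominates and others only break ties.
    return (-o.own_occupants, -(o.other_vehicle + o.pedestrians))


def choose(outcomes, utility):
    # Utilitarian rule: pick the maneuver that maximizes the given utility function.
    return max(outcomes, key=utility).maneuver


# Invented numbers, for illustration only.
options = [
    Outcome("brake_in_lane", own_occupants=0.2, other_vehicle=1.5, pedestrians=0.0),
    Outcome("swerve_left", own_occupants=0.8, other_vehicle=0.1, pedestrians=0.0),
    Outcome("swerve_right", own_occupants=0.1, other_vehicle=0.3, pedestrians=1.2),
]

print(choose(options, utility_all_parties))      # swerve_left
print(choose(options, utility_occupants_first))  # swerve_right
```

With these made-up numbers, counting everyone favours swerving left (0.9 expected injuries in total), while putting the occupants first favours swerving right, which is exactly the “whose greater good?” question above.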

Deontology

Deontology is governed by normative rules that are inviolable: they can never be overridden by any other principle, circumstance or situation. Unlike in utilitarianism, the action matters more than the outcome. If we follow a deontological approach, the AV would treat everyone equally and would not distinguish between the driver, the passengers, the people in the other car, etc. The AV would follow the set of rules in its decision space, based on human rights and other universal values, regardless of the outcome of the accident. Through this lens, the shortcoming of deontology is that the AV’s actions never change with the situation or the circumstances. They won’t be adapted to the final outcome, nor will they need to be explained, since the universal values are unchallengeable and untouchable.
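A deontological chooser can be sketched as a set of inviolable rules applied before, and independently of, any outcome estimate. This is a minimal illustration only: the rules, the maneuver names and the preference order below are hypothetical placeholders, not an actual AV rule set.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Maneuver:
    name: str
    targets_person: bool      # would it deliberately steer into a person?
    crosses_solid_line: bool  # would it break a traffic rule?


# Hypothetical inviolable rules: each predicate returns True if the maneuver is forbidden.
# None of them looks at who would be harmed or how many.
RULES = [
    ("never deliberately steer into a person", lambda m: m.targets_person),
    ("never cross a solid line", lambda m: m.crosses_solid_line),
]


def violations(m: Maneuver) -> list[str]:
    """Names of the rules this maneuver would break."""
    return [name for name, forbids in RULES if forbids(m)]


def choose(maneuvers: list[Maneuver]) -> str:
    """Return the first maneuver, in a fixed preference order, that breaks no rule.

    Outcomes are never estimated or compared: the decision depends only on the
    rules and on the order of `maneuvers`.
    """
    for m in maneuvers:
        if not violations(m):
            return m.name
    raise ValueError("no maneuver satisfies every rule")


options = [
    Maneuver("swerve_left", targets_person=False, crosses_solid_line=True),
    Maneuver("swerve_right", targets_person=True, crosses_solid_line=False),
    Maneuver("brake_in_lane", targets_person=False, crosses_solid_line=False),
]

print(choose(options))          # brake_in_lane
print(violations(options[1]))   # ['never deliberately steer into a person']
```

Here `brake_in_lane` is chosen even if another maneuver might harm fewer people: the rules never weigh who, or how many, would be hurt, which is both the appeal and the shortcoming described above.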

Relativism

Ethical Relativism is a different animal. Its foundation lies in considering that ethics are a cultural product. Hence everyone’s ethical values and way of being are valid and morally acceptable if this is how things are done in the community at a specific time. As a result, ethical conduct is defined by society’s norms and it varies across communities.

Even if it promotes tolerance for everyone’s views, values and practices, it also leads to what is known as moral paralysis. Hypothetically, if drunk driving were the custom, it would be considered immoral to drive sober. But let’s set moral paralysis aside for now…

A more relevant example of moral relativism in the trolley dilemma would be developing AVs according to cultural dimensions. The AV could opt to spare the driver’s life if the people were from individualistic cultures (the US, for instance), spare the young if they were from long-term oriented cultures (like Japan), and take no action (or any action, for that matter) if they had an external locus of control, that is, if they believed that everything has already been written, that their destiny cannot be changed and that they have no control over the accident in the first place.

SAP’s findings on ethical relativism show that the majority agreed the car should make the decision considered ethical by the specific society it belongs to. Other opinions include that whatever decision the car makes should be tolerated and cannot be judged or contested outside that society.

Absolutism

Absolutism (or monism) is the opposite of relativism: it is based on following whatever a person finds ethical in an absolute, unchallengeable way. Two people can both be absolutists and hold opposing views, in which case they would find each other unethical. In the AV trolley dilemma, an absolutist approach means that all cars abide by the same moral rules and that the car makes the ethical decision based on those universal rules, whether or not its owner agrees. This would definitely make people less accepting of the technology, and some would choose not to buy an AV if, in a critical situation, the car could do the opposite of what the driver wants.

Ethical Pluralism

Ethical pluralism, or value pluralism, explores how human beings can hold norms and values that are (or can be) incompatible and incommensurable (cannot be ranked against each other), and apply them differently based on their own cultural context, traditions, etc.

Seen from a value pluralism perspective, the trolley dilemma suggests that the AV would need to abide by the current law of the state it is in and then take the owner’s morals into account when making the decision. Other views include fine-tuning each value individually and having the car decide based on that; putting human rights at the core and having the car do whatever leads to the best, or least damaging, outcome for society; and accepting that the decision may differ across cultures while selecting some moral values at a wider, global scale that the car should always respect.
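The layered views above (global values the car should always respect, then the law of the state it is in, then the owner’s morals) can be sketched as successive filters followed by a ranking. This is a minimal illustration under stated assumptions: the jurisdictions, tags, harm scores and owner penalties are all hypothetical and combine several of the survey answers into one procedure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    tags: frozenset  # hypothetical properties of the maneuver
    harm: float      # hypothetical predicted harm to society (lower is better)


# Layer 1: hypothetical global values the car should always respect.
GLOBAL_FORBIDDEN = {"targets_person"}

# Layer 2: hypothetical local traffic law, keyed by jurisdiction.
LOCAL_FORBIDDEN = {
    "state_A": {"crosses_solid_line"},
    "state_B": set(),
}


def decide(options, jurisdiction, owner_penalties):
    """Apply global values, then local law, then rank by harm plus owner penalties.

    owner_penalties maps a tag to an extra cost the owner attaches to it.
    """
    allowed = [
        o for o in options
        if not (o.tags & GLOBAL_FORBIDDEN)
        and not (o.tags & LOCAL_FORBIDDEN[jurisdiction])
    ]
    if not allowed:
        raise ValueError("no option respects both global values and local law")

    def cost(o):
        # Predicted harm plus whatever penalties the owner attaches to the option's tags.
        return o.harm + sum(owner_penalties.get(t, 0.0) for t in o.tags)

    return min(allowed, key=cost).name


options = [
    Option("swerve_left", frozenset({"crosses_solid_line"}), harm=0.9),
    Option("swerve_right", frozenset({"targets_person"}), harm=1.6),
    Option("brake_in_lane", frozenset(), harm=1.7),
]

print(decide(options, "state_A", {}))                           # brake_in_lane
print(decide(options, "state_B", {}))                           # swerve_left
print(decide(options, "state_B", {"crosses_solid_line": 1.0}))  # brake_in_lane
```

The same car reaches different decisions in different jurisdictions, and, where the law leaves room, the owner’s own penalties can tip the choice, while the global layer is never negotiable.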

All these ethical frameworks act as different windows of thought and show how intricate it is to design a system that has real-life consequences and can work successfully in a culturally diverse world.

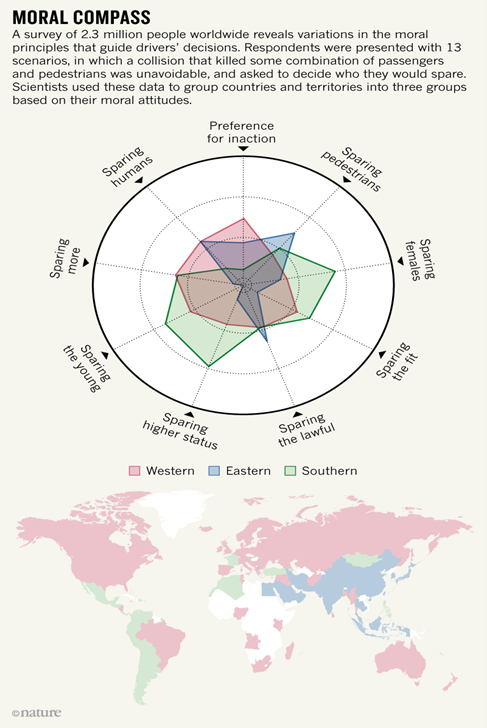

A study illustrating the moral map for AVs, published in Nature with 2.3M participants, shows how culturally diverse people’s thinking paradigms, beliefs, actions, behaviour and morality are.

Photo credit: Nature. More on the study here

Is there any right answer we can come up with after being caught in the weeds of the trolley dilemma for AVs? Is there still hope that we can engineer a system for our AVs that will minimize damage and respect people’s ethical considerations, no matter how varied, diverse and opposing they are? Maybe there is.

Value Sensitive Design

Photo credit: Jenny Ueberberg

So what is this gleaming hope we’re talking about?

Value Sensitive Design is an approach that places human values at the core of the design process. It aims at value alignment among all of a product’s stakeholders, creating an inclusive dialogue between them and potentially addressing issues that arise along the way, such as conflicts between opposing values.

How does Value Sensitive Design work?

It employs a methodology that consists of three types of investigation: conceptual, empirical & technical. The methodology is iterative and integrative.

Value Sensitive Design takes an interactional stance on how values are perceived and employed in design: while the way a technology is used depends on the user’s goals and motivations, its features and properties, by design, more readily support certain values and hinder others.

An Exercise in Value Sensitive Design

A value sensitive design approach would start with one of the core aspects, such as AV safety, and its context of use: AV decision making in critical situations.

As a second step, direct & indirect stakeholders would be identified. In this case, direct stakeholders would be tech makers, AV manufacturers, drivers, passengers, law makers, auditors, subject matter experts, everyone in the supply chain (from data labeler to marketer), etc. Indirect stakeholders would be pedestrians.

Are all of these people’s interests represented and addressed? What are the benefits and harms for each group of stakeholders above? Once these are identified, values that respond to these benefits, harms, interests and needs can be mapped out, and a conceptual investigation of each can be run. If any value conflict arises, the imbalance can be mitigated or discussed among the stakeholders (as in the ethical pluralism example of the trolley dilemma).
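The stakeholder and value-mapping step can be sketched as a small data structure that flags value conflicts for discussion. The stakeholders come from the list above, but the values (loosely inspired by values commonly used in Value Sensitive Design, such as human welfare, autonomy, accountability and trust) and each stakeholder’s stance are hypothetical, chosen only to show how a conflict surfaces; they are not findings.

```python
from collections import defaultdict

# Hypothetical stakeholder map for "AV decision making in critical situations":
# stakeholder -> (direct or indirect, {value: stance}).
# Stance +1: the stakeholder wants the value prioritized; -1: they would trade it off.
STAKEHOLDERS = {
    "driver":       ("direct",   {"autonomy": +1, "occupant_welfare": +1}),
    "passenger":    ("direct",   {"occupant_welfare": +1}),
    "manufacturer": ("direct",   {"autonomy": -1, "trust": +1}),
    "law_maker":    ("direct",   {"accountability": +1, "pedestrian_welfare": +1}),
    "pedestrian":   ("indirect", {"pedestrian_welfare": +1, "occupant_welfare": -1}),
}


def value_map(stakeholders):
    """Invert the map to value -> {stakeholder: stance}."""
    by_value = defaultdict(dict)
    for name, (_kind, values) in stakeholders.items():
        for value, stance in values.items():
            by_value[value][name] = stance
    return by_value


def conflicts(stakeholders):
    """Return values that some stakeholders support and others oppose."""
    found = {}
    for value, stances in value_map(stakeholders).items():
        supporters = sorted(n for n, s in stances.items() if s > 0)
        opponents = sorted(n for n, s in stances.items() if s < 0)
        if supporters and opponents:
            found[value] = (supporters, opponents)
    return found


def indirect_stakeholders(stakeholders):
    """List indirect stakeholders, whose interests are easy to overlook."""
    return [n for n, (kind, _values) in stakeholders.items() if kind == "indirect"]


if __name__ == "__main__":
    print("Indirect:", indirect_stakeholders(STAKEHOLDERS))
    for value, (pro, con) in conflicts(STAKEHOLDERS).items():
        print(f"Conflict on {value}: {pro} vs {con}")
```

With these illustrative stances, `autonomy` (driver input versus a decision fixed by design) and `occupant_welfare` (occupants versus pedestrians) surface as the conflicts to bring back to the stakeholders, mirroring the trolley dilemma.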

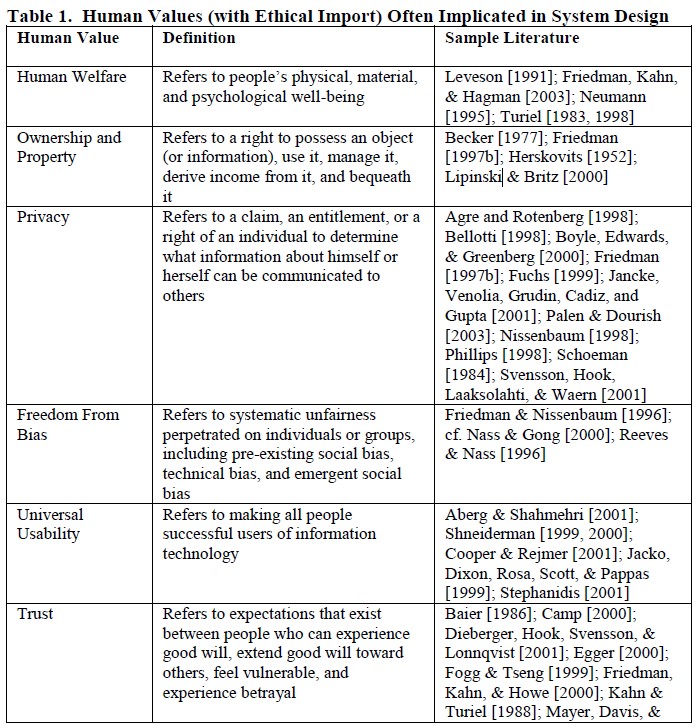

Iterations can be made at any point in the process while building the system. Here is a table of human values frequently implicated in Value Sensitive Design:

Chart credit: Batya Friedman, Peter H. Kahn, Alan Borning, Ping Zhang – Value Sensitive Design and Information Systems

To complete this Value Sensitive Design exercise and carry out the value alignment once each stakeholder group’s values have been identified, join us on our Discord channel or become a contributor to our Data Passport project.

See how far we’ve got and get the opportunity to collaborate with members of Open Ethics & other like-minded contributors to help bring solutions to complex problems and help humans and AI successfully work together.

Other Ethical Considerations & Risks related to Levels 4 & 5

Photo Credit: Brandon Shaw

Unfortunately, the ethical considerations branch out far beyond the trolley dilemma, further than its originators could have imagined, especially for the last two levels of automation.

Levels 4 & 5 of automation are not required to disallow human driving. This means you may or may not be able to drive the vehicle, depending on whether the automaker chooses to include that function. Questions then arise about, for example, handing your driving rights to a friend who wants to commute from point A to point B using your car.

- Would your friend be considered a passenger? In case of an accident, who would be liable? Would we treat level 4 & 5 AVs as moral agents? Where do we stand on liability & agency? Does the car act as a moral extension of us, or even as our proxy?

- From an accessibility perspective, self-driving cars may be a game-changer for the mobility of people who are unable to drive themselves due to age, health reasons, disabilities, etc. One concern is building a driving option into level 4/level 5 AVs by design and selling these vehicles to people who would generally not be allowed to drive, or having them “drive” a friend’s AV. Would the driving right be taken away from a car whose driver needs assisted driving, to ensure that person never tries to drive without the assisted function?

- Would children be allowed to ride in a level 5 AV without an adult, but with parental agreement?

- Would the cars, by design, no longer have pedals, a steering wheel or any other driving controls? Would a fully autonomous vehicle be configured differently from a conventional one? What would the implications be of a level 4/level 5 AV not offering human driving even as an option?

- Can the car take a better decision than the driver?

- Can the driver override the car’s default decisions in a level 4 or 5 AV? Can the driver be given back full control, or would the car have an emergency mode in which it follows the driver’s commands even if that means, for instance, exceeding the speed limit?

- Do people get to decide what happens in an imminent accident before driving the car, or is that decision already made by design? Can people choose among multiple ethical alternatives? Should it be an individual decision, or is it better to design the car with the decision already made?

- Is data poisoning a real threat? Can we talk about safety and security by design? If so, to what extent?

- What do we do about bias?

- How do we even begin to address privacy?

- Would pedestrians need to adjust their behaviour to the new technology?

- How reliant are people becoming on technology?

- How does technology acceptance come into play, especially given all these ethical considerations?

One article is definitely not enough to cover all these questions. However, there is a great opportunity to address them, hopefully before fully autonomous vehicles are deployed, especially as the self-driving car industry is paving the roads of the future and is here to stay.

To conclude with an analogy, this is the first time in history that we see the warrior’s sword (the tool & the symbol of power) fuse with the warrior himself (the decision-maker).

And if you feel brave enough to ask the hard questions and make sure the warriors use their swords masterfully and ethically, come join our work and contribute here.

Featured Photo Credits: Jonas Leupe