Balancing Act: Navigating Safety and Efficiency in Human-in-the-Loop AI

Deploying AI in production involves trade-offs between safety and efficiency. Humans set up, tune, test, and use AI systems, providing feedback and guidance to the machines.

How can we ensure that human input is reliable, ethical, and consistent? How can we balance human effort and machine autonomy? How can we design and evaluate AI systems that are not only accurate, but also trustworthy, fair, and explainable? These are some of the questions that this article will explore, as we navigate the complex and dynamic landscape of human-in-the-loop (HITL) AI.

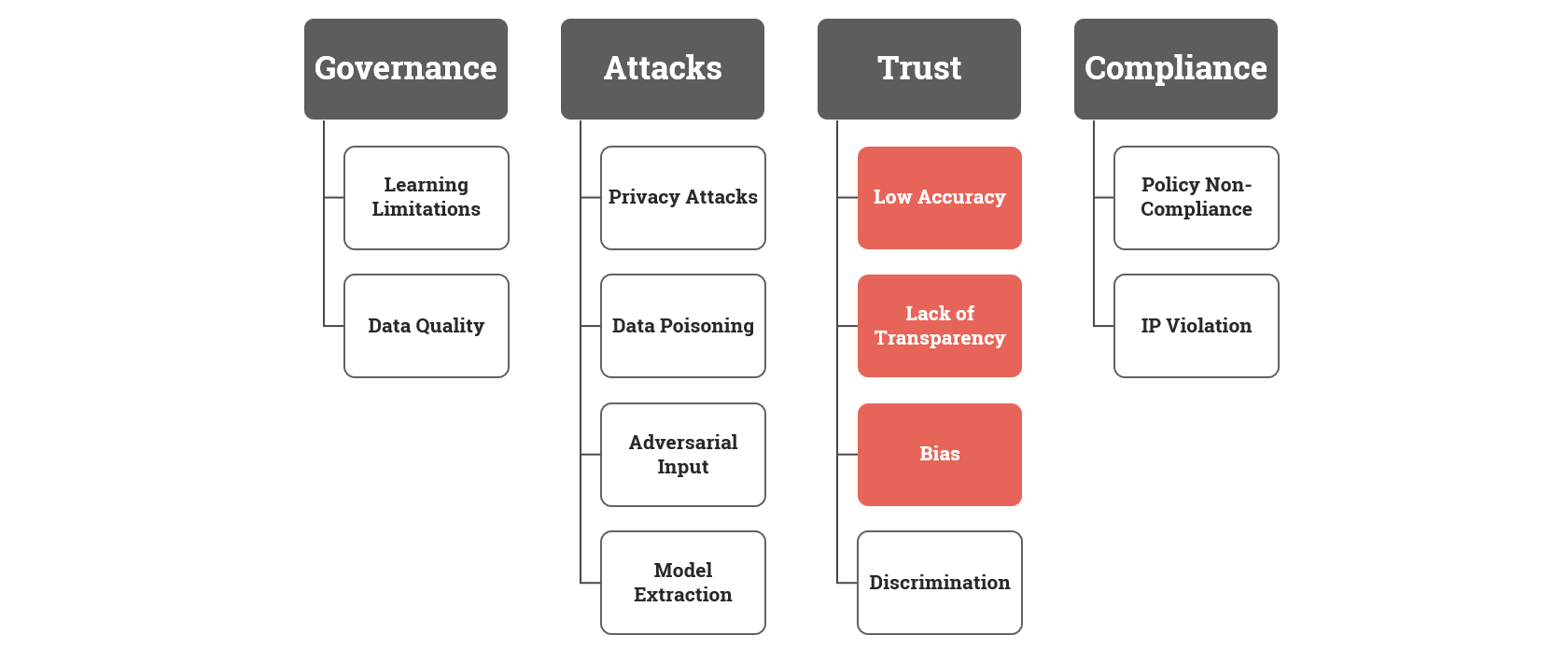

According to AIRS, which was initially developed for the financial industry, the potential risks of AI/ML deployment can be classified into four categories: data-related risks, AI/ML attacks, testing and trust, and compliance.

- Data-related risks include issues such as data quality, privacy, security, and bias.

- AI/ML attacks refer to malicious attempts to compromise, manipulate, or exploit the AI/ML systems or their outputs.

- Testing and trust involve challenges in verifying, validating, explaining, and auditing the AI/ML systems and their outcomes.

- Compliance relates to the legal and regulatory obligations and standards that apply to the use of AI/ML.

There is a patchwork of solutions and best practices for mitigating these risks, such as establishing AI governance frameworks, enhancing transparency and explainability, adopting differential privacy and watermarking techniques, and monitoring and auditing autonomous systems in real time.

The iterative nature

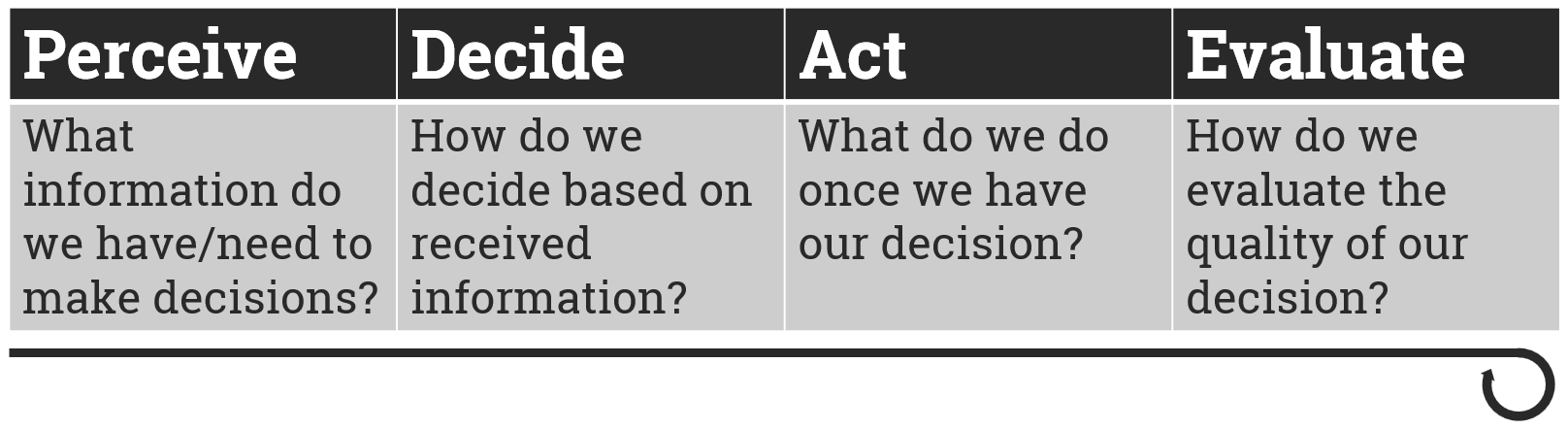

Building and deploying mission-critical AI models is a staged and iterative process that requires refinement at each stage, both before and after a system faces the real world. Decision approaches should be challenged during both training and inference. What does that mean exactly?

In the ever-evolving landscape of artificial intelligence, the concept of Human-in-the-Loop has emerged as a pivotal paradigm that bridges the gap between human expertise and machine learning capabilities. It embodies a collaborative approach where human intelligence and intuition are harnessed alongside AI algorithms. Human-in-the-Loop AI brings benefits in various domains, from enhancing the accuracy of machine learning models to reaching the desired output quality faster. Moreover, this approach not only empowers machines but also elevates human performance by making individuals more efficient in their tasks.

Human-in-the-loop (HITL) is a design pattern in AI that leverages both human and machine intelligence to bring meaningful automation scenarios into the real world. The human-in-the-loop approach reframes an automation problem as a Human-Computer Interaction (HCI) design problem. With this approach, AI systems are designed to augment or enhance human capacity, serving as a tool to be exercised through human interaction. (Source: Open Ethics Taxonomy)

Below we explore the multifaceted role of HITL approaches, delving into four scenarios where this symbiotic relationship between humans and machines is proving to be a game-changer: refining machine learning accuracy, expediting AI’s learning curve, enhancing human precision, and optimizing human efficiency.

- Making Machine Learning models more accurate: Human-in-the-Loop is instrumental in enhancing the accuracy of Machine Learning models by leveraging human expertise and intuition. In scenarios where AI algorithms may struggle due to complex or uncommon data patterns, humans can step in to validate, correct, and eventually label data points. This iterative process, combining human judgment with machine computation, helps fine-tune algorithms and ensure they produce more reliable and precise results, ultimately boosting the overall accuracy of the AI outputs.

- Getting Machine Learning to the desired accuracy faster: Human-in-the-Loop expedites the journey to achieving the desired accuracy in Machine Learning models. Instead of relying solely on automated processes, humans can guide the AI systems in the right direction by providing timely feedback and adjusting model parameters. This interactive feedback loop significantly reduces the trial-and-error period, allowing AI to reach the desired level of accuracy more swiftly, saving time and resources.

- Making humans more accurate: Human-in-the-Loop can also enhance human performance by pairing people with AI systems. In contexts where precision and consistency are paramount, AI can assist humans in tasks such as medical diagnoses, data analysis, or language translation. By aiding humans in making more accurate decisions and reducing errors, AI can be a valuable tool for augmenting human capabilities, especially in domains where accuracy and sustainability are crucial.

- Making humans more efficient: Human-in-the-Loop AI can streamline processes by automating repetitive and time-consuming tasks. AI can handle routine aspects of a task, leaving humans to focus on more complex and creative elements. This not only increases the overall productivity but also reduces the potential for human fatigue and errors. By offloading routine work to AI, organizations can make better use of human skills and cognitive abilities, leading to improved efficiency across various domains.
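The first two scenarios above rely on humans stepping in where the model struggles. One common way to decide which items reach a human is to route low-confidence predictions to a review queue. The sketch below is only an illustration under stated assumptions: the `Prediction` type, the `route_for_review` function, and the 0.8 threshold are hypothetical names and values chosen for this example, not part of any particular library or of the approach described in this article.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's probability for `label`, in [0, 1]


def route_for_review(
    predictions: List[Prediction], threshold: float = 0.8
) -> Tuple[List[Prediction], List[Prediction]]:
    """Split predictions into auto-accepted items and a human review queue."""
    auto_accepted: List[Prediction] = []
    needs_review: List[Prediction] = []
    for p in predictions:
        if p.confidence >= threshold:
            auto_accepted.append(p)
        else:
            needs_review.append(p)
    # Least confident first, so human effort goes where the model struggles most.
    needs_review.sort(key=lambda p: p.confidence)
    return auto_accepted, needs_review


preds = [
    Prediction("a", "cat", 0.97),
    Prediction("b", "dog", 0.55),
    Prediction("c", "cat", 0.72),
]
auto, review = route_for_review(preds)
print([p.item_id for p in auto])    # ['a']
print([p.item_id for p in review])  # ['b', 'c']
```

Labels corrected by reviewers can then be added to the training set. The threshold is a design choice: a lower value saves human effort, a higher value sends more items to review.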

The main areas for looping human inputs

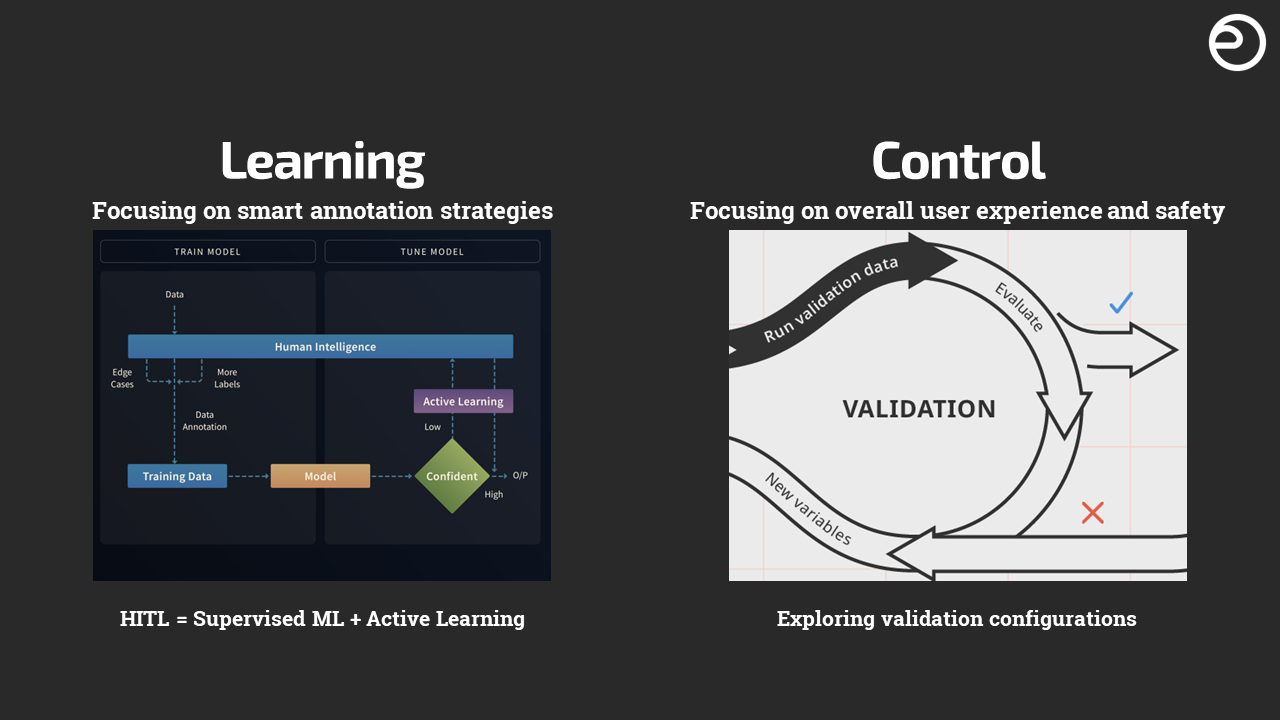

Involving humans to label data and to approve decisions serves different roles in the AI ecosystem. This is about learning and control. Data labeling contributes to the training and accuracy of AI models, while decision approval is focused on validating AI outcomes and aligning them with predefined standards and objectives. Both are critical aspects of Human-in-the-Loop AI, with distinct purposes and implications.

Two distinct aspects of Human-in-the-Loop AI, each serving different purposes in the machine learning and decision-making process.

Here are the key differences:

| Aspect | Data Labeling | Decision Approval |
| --- | --- | --- |
| Nature of Involvement | When humans are involved in data labeling, their primary role is to annotate or categorize data points. This process typically occurs during the training phase of machine learning models. Humans review data and assign relevant labels, helping the AI system learn and generalize from the labeled data. | In the context of decision approval, humans are engaged to assess and validate the decisions made by AI systems. This occurs after the AI has processed data or made predictions. Humans review the outcomes and provide approval or make adjustments to ensure the decisions align with desired criteria. |
| Timing | Data Labeling typically occurs in the early stages of developing machine learning models. It’s a precursor to training AI algorithms and plays a crucial role in building accurate models. | Decision Approval happens after the AI has already made predictions or decisions. Humans step in to verify and validate the AI’s outputs. |
| Error Correction | Errors in data labeling primarily pertain to the accuracy of labels assigned to the data. If mistakes are made during data labeling, they can affect the model’s learning process and its subsequent performance. | Errors in decision approval involve assessing whether the AI’s decisions align with the desired outcomes or criteria. Humans correct or validate these decisions to ensure that they meet specific quality standards or ethical guidelines. |
| Role and Expertise | Data labelers need expertise in understanding the data and applying relevant labels accurately. They may not require in-depth knowledge of the AI model’s underlying algorithms. | Decision approvers often require domain expertise and an understanding of the AI system’s operation. They need to evaluate the decisions in the context of the specific application and consider ethical, legal, or domain-specific factors. |
| Impact on AI | Data Labeling directly influences the AI model’s training and accuracy. High-quality data labeling is essential for building effective machine learning models. | Decision Approval doesn’t influence the AI model’s training but affects the quality and reliability of AI outputs. It ensures that AI decisions align with the intended goals and meet specific criteria. |
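The distinction between data labeling and decision approval can be made concrete in code. The sketch below is a minimal illustration, not a reference implementation: `HitlRecords`, `label_item`, and `approve_decision` are hypothetical names, and the lambdas stand in for real human input. It keeps the two kinds of human input in separate stores, reflecting that labels feed training while approvals validate outputs after inference.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class HitlRecords:
    # Human-assigned labels; these feed model training.
    training_labels: List[Tuple[str, str]] = field(default_factory=list)
    # (item, model decision, final decision); an audit trail, not training data.
    reviewed_decisions: List[Tuple[str, str, str]] = field(default_factory=list)


def label_item(item: str, annotate: Callable[[str], str], records: HitlRecords) -> str:
    """Data labeling: a human assigns a label before training."""
    label = annotate(item)
    records.training_labels.append((item, label))
    return label


def approve_decision(
    item: str,
    model_decision: str,
    review: Callable[[str, str], str],
    records: HitlRecords,
) -> str:
    """Decision approval: a human confirms or adjusts the model's output."""
    final_decision = review(item, model_decision)
    records.reviewed_decisions.append((item, model_decision, final_decision))
    return final_decision


records = HitlRecords()
label_item("invoice_001.pdf", lambda item: "invoice", records)
final = approve_decision(
    "loan_application_17",
    "approve",
    lambda item, decision: "reject",  # stand-in for a human overriding the model
    records,
)
print(records.training_labels)
print(records.reviewed_decisions)
print(final)
```

Storing the model's decision next to the human's final decision makes disagreements easy to audit later.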

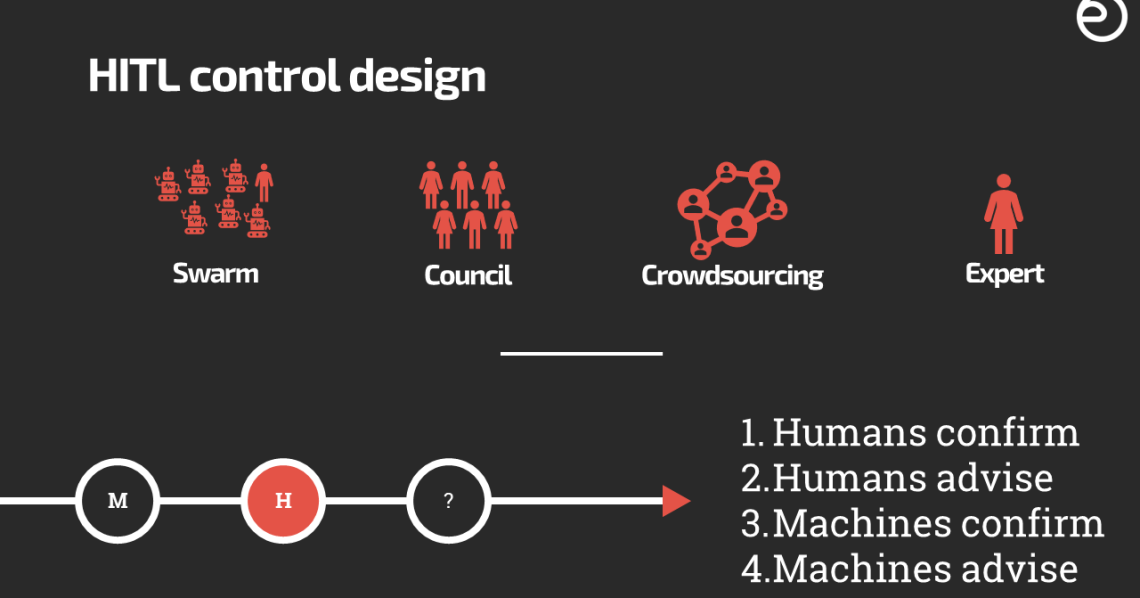

HITL control and interaction design

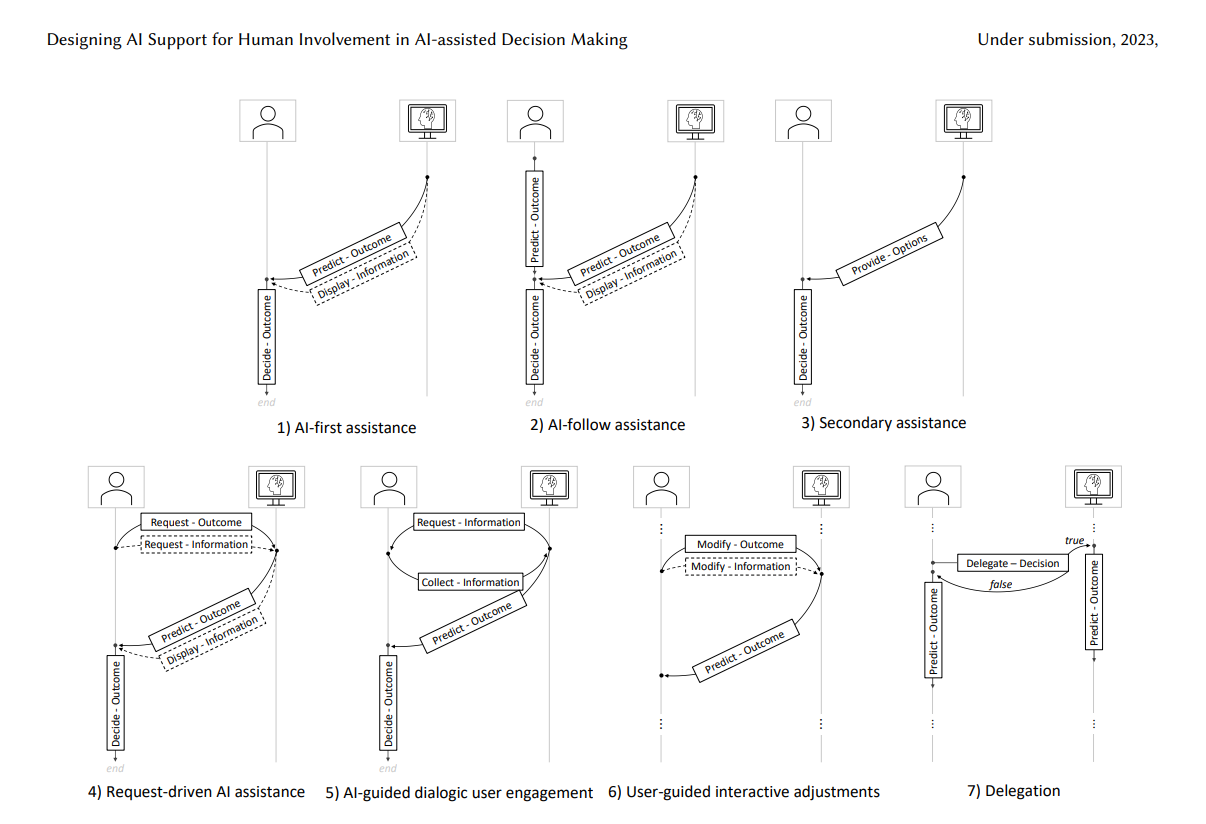

Numerous empirical investigations have examined interactions between humans and AI across various application domains, each characterized by a diverse range of interaction styles. However, a standardized taxonomy for describing these Human-AI interaction procedures has not yet been established. To bridge this gap, a group of researchers from JHU conducted a comprehensive review of the literature on AI-assisted decision-making and introduced a taxonomy of interaction patterns that describes the various modes of interactivity between humans and AI.

Taxonomy of interaction patterns identified in AI-assisted decision making. Source: Catalina Gomez, Sue Min Cho, Shichang Ke, Chien-Ming Huang, Mathias Unberath, "Designing AI Support for Human Involvement in AI-assisted Decision Making: A Taxonomy of Human-AI Interactions from a Systematic Review", 2023.

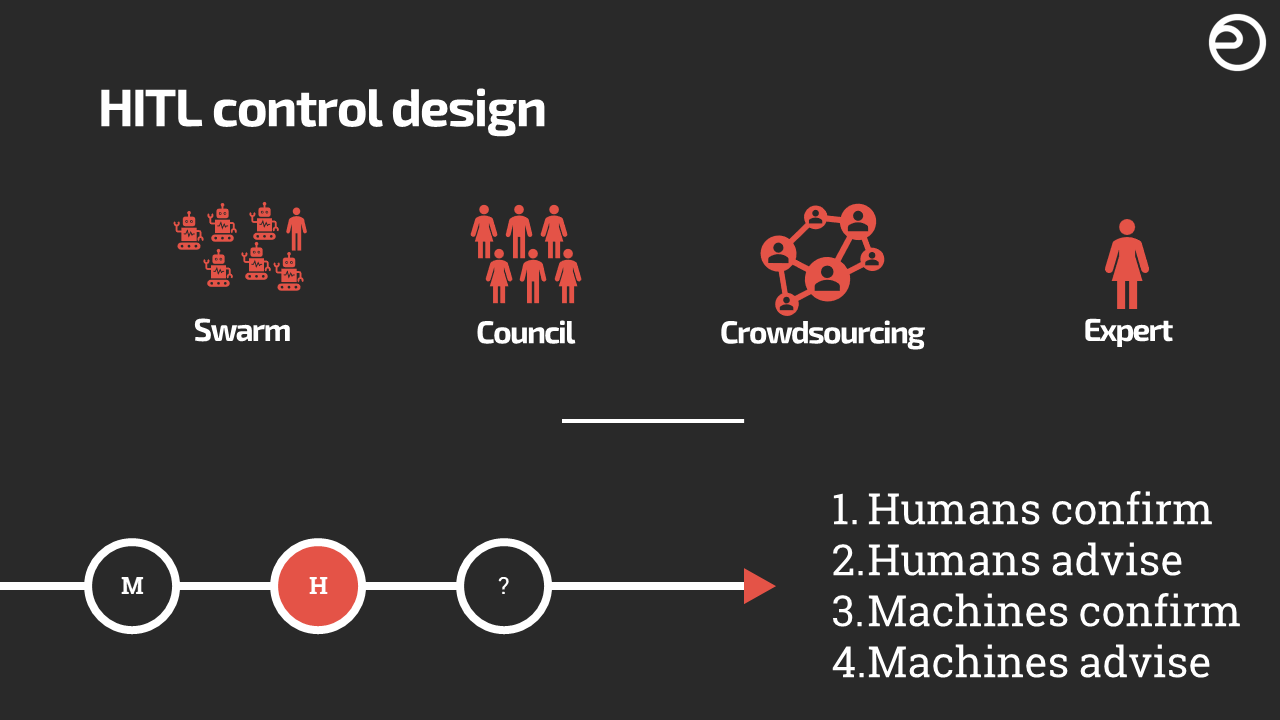

At Open Ethics we help engineers and leaders understand how HITL can be effectively implemented in production environments. We argue that interactions can be reduced to a total of 4 key control setups and 4 key pipeline configurations, the combination of which should be chosen based on the available resources, risks, and deployment complexity.

Combining the 4 control setups with the 4 pipeline options gives a total of 4 × 4 = 16 distinct configurations for HITL implementation. Each combination represents a unique way in which humans and machines can be involved in the decision-making process within different pipeline structures.

| Control Setup (Who?) | Pipeline Options (How?) |
| --- | --- |
| 1. Swarm, 2. Council, 3. Crowdsourced, 4. Expert | 1. Humans confirm, 2. Humans advise, 3. Machines confirm, 4. Machines advise |
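The 16 configurations are simply every pairing of one control setup with one pipeline option. A short sketch of that enumeration follows. The option names come from the table above, while the variable names are illustrative.

```python
from itertools import product

CONTROL_SETUPS = ["Swarm", "Council", "Crowdsourced", "Expert"]
PIPELINE_OPTIONS = [
    "Humans confirm",
    "Humans advise",
    "Machines confirm",
    "Machines advise",
]

# Cartesian product: each control setup paired with each pipeline option.
configurations = list(product(CONTROL_SETUPS, PIPELINE_OPTIONS))

print(len(configurations))  # 16
for control, pipeline in configurations[:4]:
    print(f"{control} + {pipeline}")
```

Listing the combinations this way can help when documenting which configuration a given deployment uses.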

HITL and Open Ethics Label

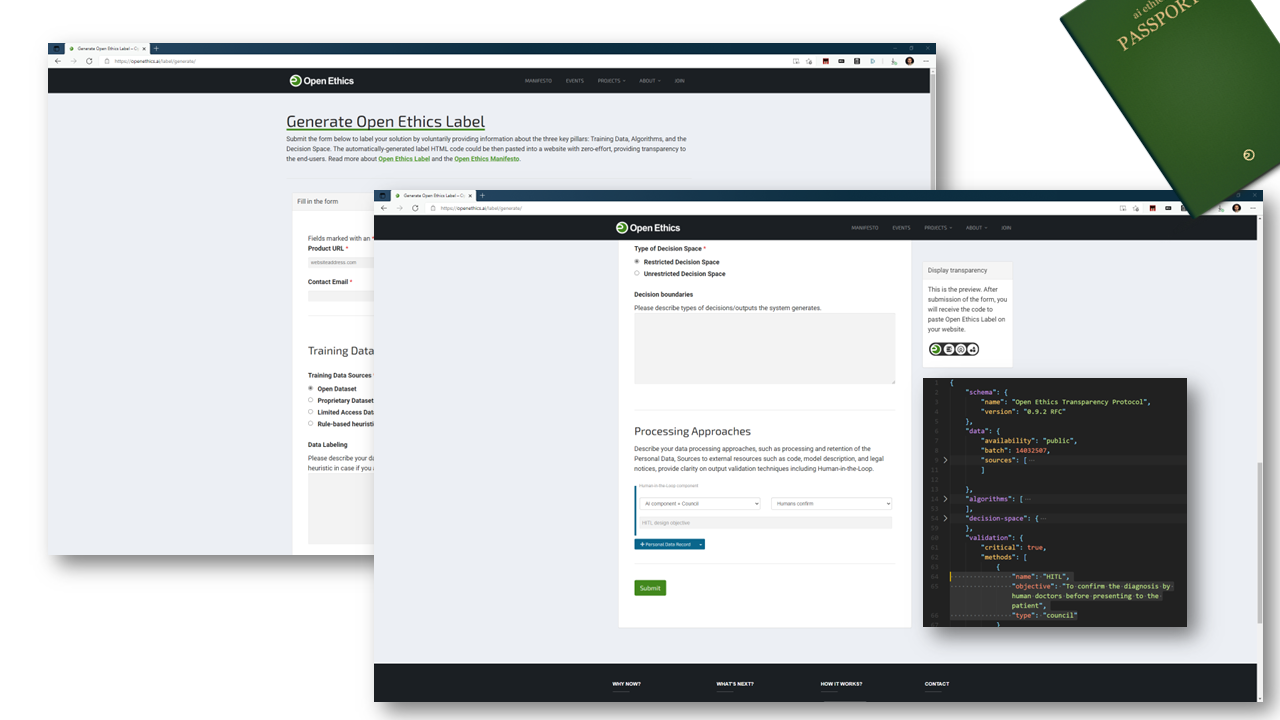

Disclosing and making transparent how Human-in-the-Loop is implemented is not only an ethical imperative but also a practical necessity. It ensures accountability, addresses ethical concerns, builds trust, and facilitates compliance with regulations. It ultimately leads to more responsible and trustworthy AI systems that benefit individuals and society as a whole.

We implemented this part in the Open Ethics Label form to allow building accountable systems and to provide a tool for improvement: with the Open Ethics Label, AI decisions can be traced back to historical HITL configurations.

Useful reading and risk considerations

Effective redundancy for Safety

Incorporate human redundancy structures: active and standby human redundancy, duplication and overlap of functions, and cognitive diversity.

Source: David M. Clarke, "Human redundancy in complex, hazardous systems: A theoretical framework".

Mitigating Human Annotation Errors

The human ability to label data effectively depends on the learning sequence. Quality can be improved by local changes in the order in which instances are presented to the annotators.

Cyber Physical Systems and HITL

The HITL concept exhibits limitations due to the different natures of the systems involved. It is proposed that, besides the human feedback loop, Bio-CPS validation is needed.

Going beyond validation of output

The human’s role is elevated from simply evaluating model predictions to interpreting and even updating the model logic. Providing explainable or “middleware” solutions to validators increases quality.