A (more) visual guide to the proposed EU Artificial Intelligence Act

What are the main components of the proposal, and how should the forthcoming legal framework be understood?

On April 21, 2021, the European Commission published its Proposal for a Regulation on Artificial Intelligence. The European Commission’s legislative proposal for an Artificial Intelligence Act is the first initiative, worldwide, that provides a legal framework for AI.

This post aims to clarify the main components of the proposal and to help readers understand the forthcoming legal framework.

What is it about?

This Regulation lays down rules for the deployment and use of artificial intelligence systems in the EU, including prohibitions of certain artificial intelligence practices, requirements, and obligations for operators of “high-risk” AI systems, as well as the approach to AI market monitoring and surveillance.

To get an idea of the content of this regulatory proposal, have a look at the phrase frequency distribution.

EU AI Act phrase frequency distribution

I removed words that carry little information (stop words). As the picture shows, the most frequently mentioned phrases are “high-risk”, “safety component”, “conformity assessment”, “intended use”, and, not surprisingly, “authorities”.
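A phrase frequency count of this kind can be sketched in a few lines of Python. This is only an illustration of the general idea, not the exact procedure behind the picture: the stop-word list, the two-word phrase length, the `phrase_frequencies` helper and the sample text are all assumptions made for the example.

```python
import re
from collections import Counter

# Hypothetical stop-word list; the words actually removed for the picture are not listed in this post.
STOP_WORDS = {
    "the", "of", "a", "an", "and", "or", "to", "in", "for",
    "is", "as", "by", "that", "with", "on", "be", "shall",
}


def phrase_frequencies(text, n=2):
    """Count n-word phrases, skipping any phrase that contains a stop word."""
    # Lowercase the text and keep hyphenated terms such as "high-risk" as one token.
    tokens = re.findall(r"[a-z]+(?:-[a-z]+)*", text.lower())
    counts = Counter()
    for i in range(len(tokens) - n + 1):
        window = tokens[i:i + n]
        if not any(token in STOP_WORDS for token in window):
            counts[" ".join(window)] += 1
    return counts


# Made-up sample text, standing in for the full text of the proposal.
sample = (
    "The conformity assessment of a high-risk AI system shall follow its intended use. "
    "A safety component is subject to conformity assessment before the intended use begins."
)

counts = phrase_frequencies(sample)
for phrase, count in counts.most_common(3):
    print(phrase, count)
```

Running the same function over the full text of the proposal, with a longer stop-word list, would give a ranking comparable to the one in the picture.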

How to scan through the proposal?

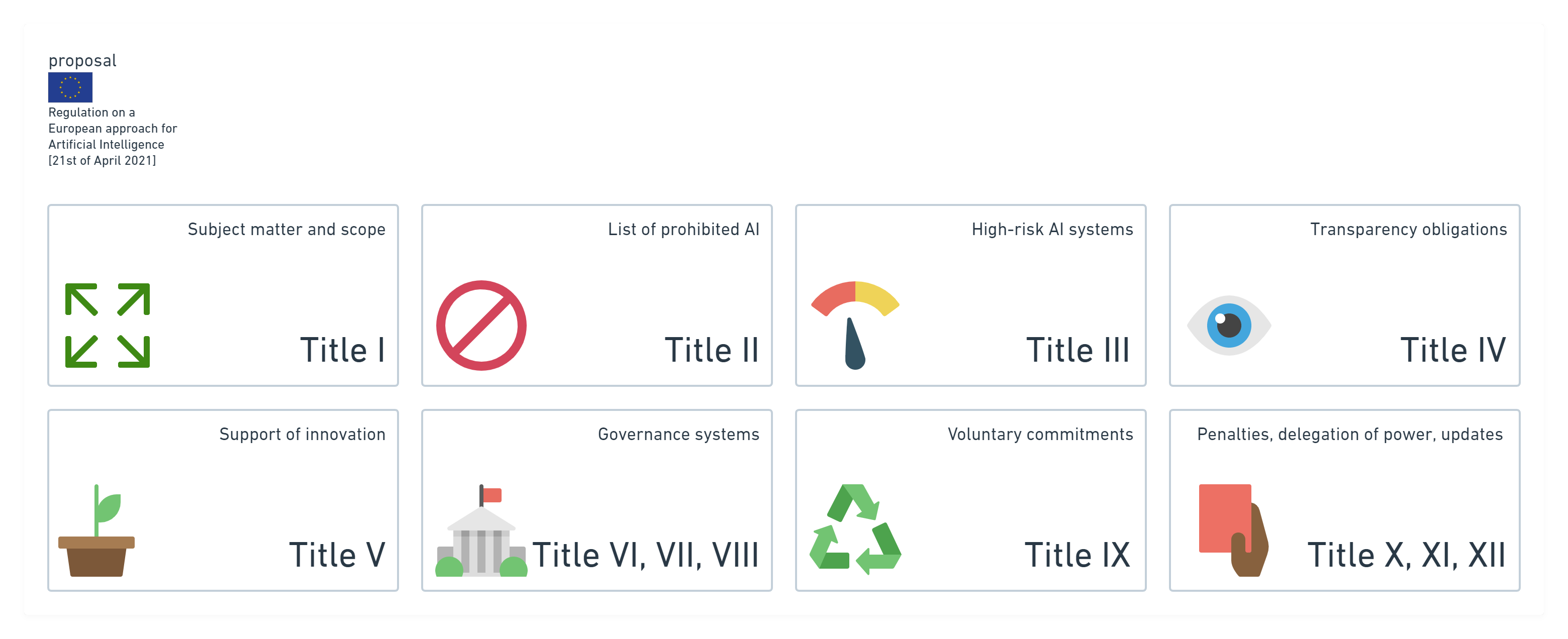

The proposed regulation consists of two documents: the proposal itself and the document with 9 Annexes.

Unfortunately, the proposal document is hard to navigate. Inconsistent numbering, a confusing structure, and headings that blend into one another do not make it easier to follow.

EU AI Act structure of the proposal

- Title I defines the scope of application of the new rules that cover the placing on the market, putting into service, and use of AI systems.

- Title II comprises all those AI systems whose use is considered unacceptable as contravening Union values, for instance by violating fundamental rights.

- Title III contains specific rules for AI systems that create a high risk. The classification of an AI system as high-risk is based on the intended purpose of the AI system, in line with existing product safety legislation.

- Title IV is about transparency obligations for systems that (i) interact with humans, (ii) are used to detect emotions or determine association with (social) categories based on biometric data, or (iii) generate or manipulate content (‘deep fakes’).

- Title V contributes to the objective to create a legal framework that is innovation-friendly.

- Title VI sets up the governance systems at Union and national levels.

- Title VII aims to facilitate the monitoring work of the Commission and national authorities.

- Title VIII sets out the monitoring and reporting obligations for providers of AI.

- Title IX concerns a framework to encourage providers of non-high-risk AI systems to apply the requirements of high-risk systems voluntarily.

- Title X sets out rules for the exchange of information obtained during the implementation of the regulation.

- Title XI sets out rules for the exercise of delegation and implementing powers.

- Title XII contains an obligation for the Commission to assess regularly the need for an update of Annex III and to prepare regular reports on the evaluation and review of the regulation.

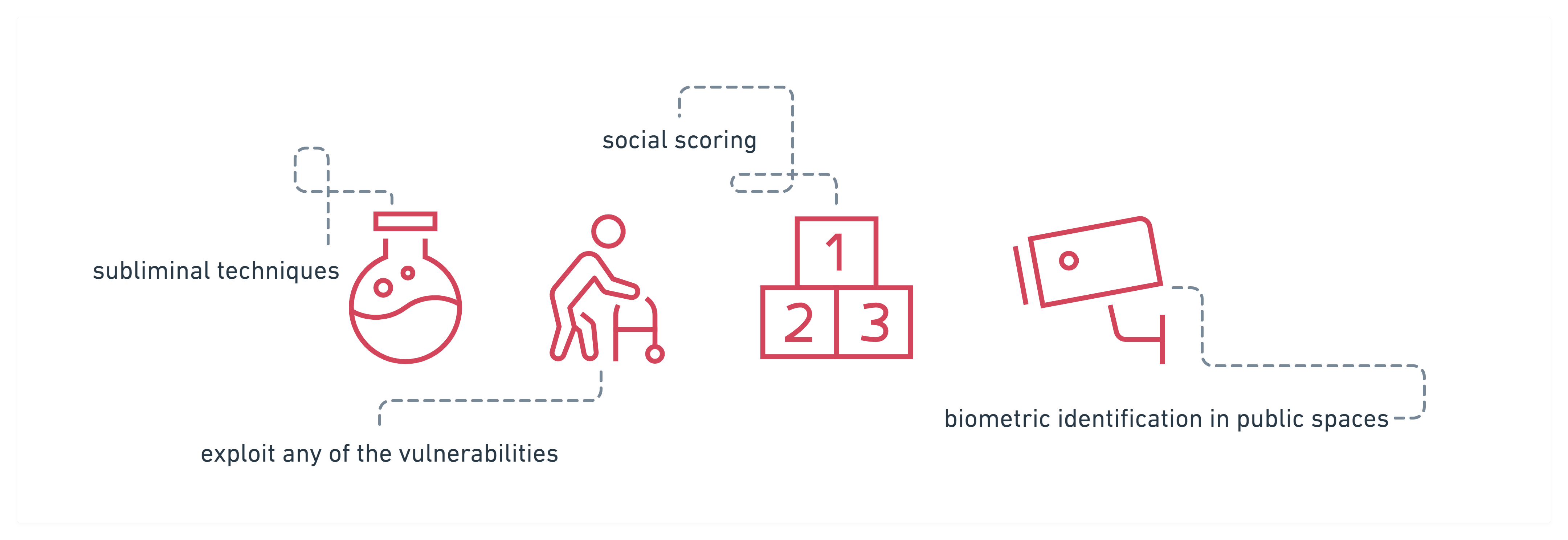

What is prohibited?

The proposal starts by outlining AI applications that are prohibited (though there are exceptions, see Article 5.3):

EU AI Act – AI applications that are prohibited

- AI that deploys subliminal techniques beyond a person’s consciousness to materially distort one’s behavior in a manner likely to cause physical or psychological harm;

- AI systems that exploit any of the vulnerabilities of a group of persons due to their age, physical or mental disability, to materially distort the behaviour or to cause harm;

- Social scoring: detrimental or unfavorable treatment of certain persons or whole groups in social contexts which are unrelated to the contexts in which the data was originally generated or collected; detrimental or unfavorable treatment of individuals/groups that is unjustified or disproportionate to their social behaviour;

- Real-time remote biometric identification systems in publicly accessible spaces for law enforcement, unless… (here we have exceptions, see Article 5(1)(d))

What is meant by “high-risk”?

A large part of the proposal focuses on so-called “high-risk AI systems”. The term “high-risk” is not well defined, but the Commission provides criteria (see Article 6) for deciding whether an AI system falls into this category: either it is covered by existing EU product safety legislation (listed in Annex II), or it is explicitly listed in Annex III (this list is to be updated annually, see Article 73).

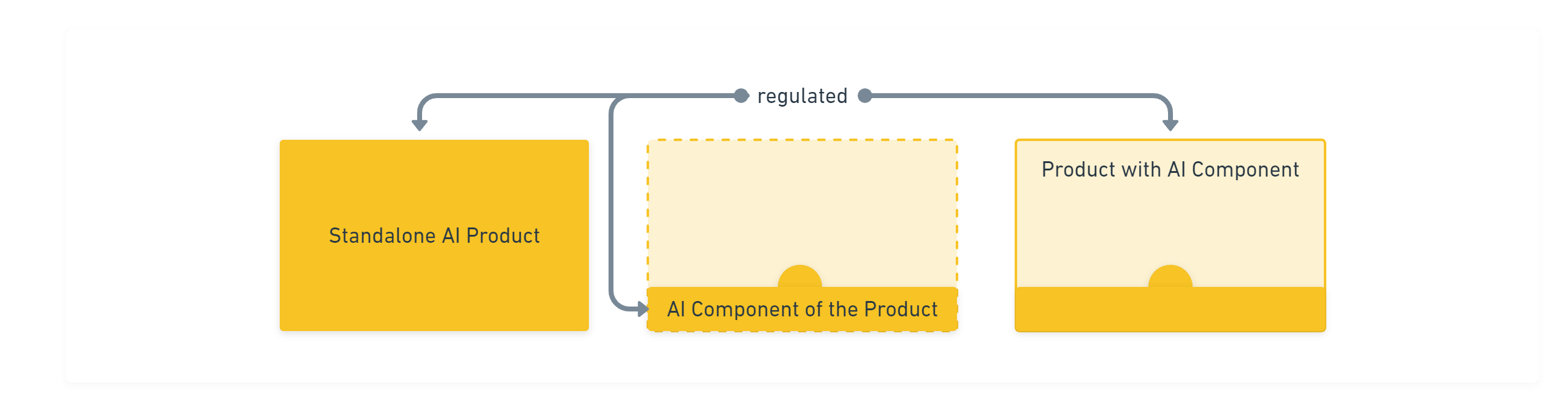

EU AI Act – scope and high-risk apps

An AI system can be considered “high-risk” irrespective of whether it is a component of another system or is put into service independently as a standalone product.

- AI system is a product with intended use within Annex II

- The product with an AI system as a safety component

- AI system is a component of the product within Annex II

- AI system is a safety component of a Product

- Annex III AI System
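The cases above can be read as a simple decision rule. The sketch below is a simplified illustration only: the `AISystem` class, its field names and the example systems are hypothetical, and the actual text of Article 6 contains further conditions that are not modelled here.

```python
from dataclasses import dataclass


@dataclass
class AISystem:
    """Hypothetical description of an AI system, used only for this illustration."""
    name: str
    is_annex_ii_product: bool = False               # the AI system is itself a product covered by Annex II legislation
    is_safety_component_of_annex_ii_product: bool = False  # it is a safety component of such a product
    listed_in_annex_iii: bool = False               # it matches a use case listed in Annex III


def is_high_risk(system: AISystem) -> bool:
    """Simplified reading of the criteria: any one of the three routes is enough."""
    return (
        system.is_annex_ii_product
        or system.is_safety_component_of_annex_ii_product
        or system.listed_in_annex_iii
    )


# Made-up examples; the flags are assumptions, not legal assessments.
embedded = AISystem("embedded safety module", is_safety_component_of_annex_ii_product=True)
standalone = AISystem("standalone Annex III system", listed_in_annex_iii=True)
other = AISystem("system outside both annexes")

for system in (embedded, standalone, other):
    print(system.name, "->", "high-risk" if is_high_risk(system) else "not high-risk")
```

Note that the rule does not depend on whether the system is a component or a standalone product; only the Annex II and Annex III links matter in this simplified view.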

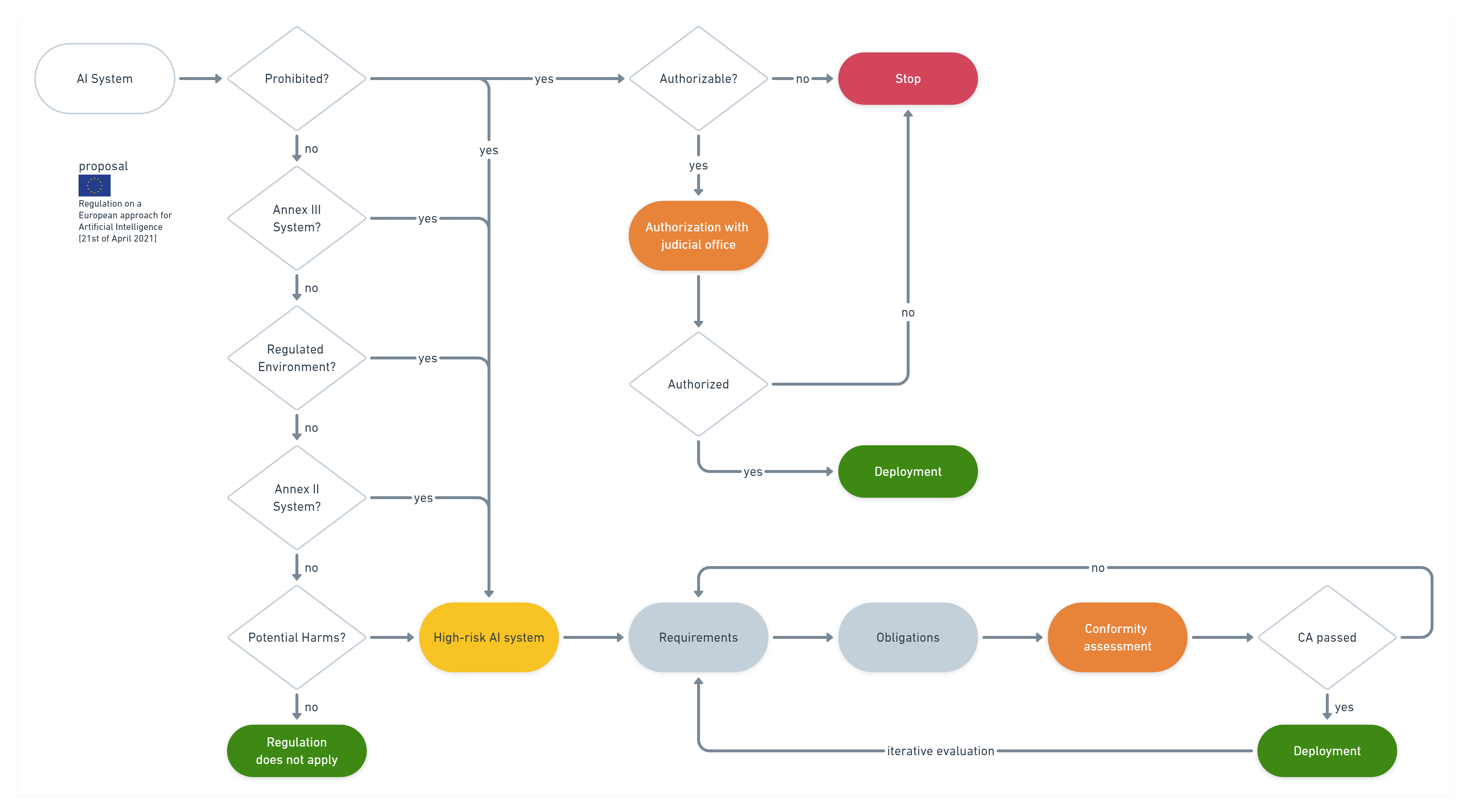

I’m developing or operating an AI system, what are my actions?

First, you need to understand whether the regulation applies to your situation and to the context in which you want to use AI. Intended use is one of the cornerstones of this regulation, so you need to be clear about how the AI product is positioned on the market, or about the purpose it serves when integrated as a component.

AI systems exclusively developed or used for military purposes should be excluded from the scope of this Regulation where that use falls under the exclusive remit of the Common Foreign and Security Policy regulated under Title V of the Treaty on the European Union (TEU).

Second, check requirements and obligations, including the need for conformity assessments.

I have drafted this diagram to visualize the thought-and-act process before deploying a high-risk AI system in the EU market.

Thought-and-act process before deploying a high-risk AI system in the EU market

By the way, together with the Montreal AI Ethics Institute and Open Ethics, we are organizing a practical workshop on “Self-disclosure for an AI product”. We invite startups and AI companies to join so that they are fully equipped to understand and address the regulatory requirements.

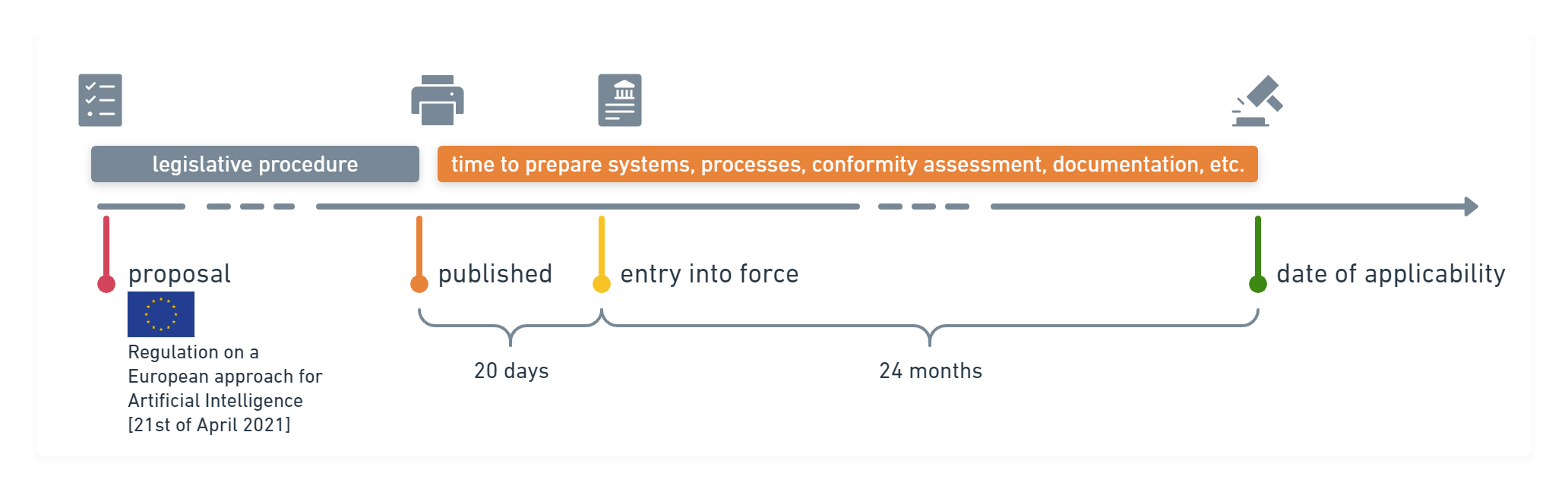

Is the regulation applied already?

No. The proposal now goes to the European Parliament and the Council of the European Union for further discussion and refinement. Once adopted, the regulation will enter into force 20 days after its publication in the Official Journal of the European Union. It will apply 24 months after that date, although some provisions will apply sooner. From the date of application, it can be fully invoked by its addressees and will be fully enforceable.

EU AI Act timeline

Due to the complexity of the AI domain, a period of time is needed between the date the regulation enters into force, i.e. the date it legally exists, and the date it can actually be applied, i.e. the date when it is enforceable and the legal rights and obligations can be effectively exercised.

This period of time (vacatio legis) is deliberately introduced so that the Member States, competent authorities, operators, organizations, license holders, and any other addressees or beneficiaries of the regulation can prepare their systems, processes, procedures, documentation, etc. for compliance with the new rules. As remarked by Jetty Tielmans, this long “grace period” increases the risk that, despite the efforts from the EC to make the regulation future-proof, some of its provisions will be overtaken by technological developments before they even apply.
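The timeline described above comes down to simple date arithmetic. The sketch below assumes a made-up publication date, since the regulation has not been adopted yet, and uses plain calendar counting rather than the formal rules for computing legal time limits. The `add_months` and `timeline` helpers are hypothetical names introduced for this example.

```python
import calendar
from datetime import date, timedelta


def add_months(start, months):
    """Return the same day-of-month `months` later, clamped to the end of the month."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def timeline(publication):
    """Entry into force 20 days after publication; general application 24 months later."""
    entry_into_force = publication + timedelta(days=20)
    general_application = add_months(entry_into_force, 24)
    return entry_into_force, general_application


# Hypothetical publication date, chosen only to make the example concrete.
publication = date(2030, 1, 15)
entry_into_force, general_application = timeline(publication)

print("Published:          ", publication.isoformat())
print("Enters into force:  ", entry_into_force.isoformat())
print("Generally applies:  ", general_application.isoformat())
```

The provisions that apply sooner than 24 months are not modelled here; they would each need their own offset from the date of entry into force.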

Sources

Proposal of EU AI Act — https://ec.europa.eu/newsroom/dae/document.cfm?doc_id=75788

Annexes — https://ec.europa.eu/newsroom/dae/document.cfm?doc_id=75789

Photo by Marius Oprea on Unsplash