Why is Transparency the Key to AI Compliance under the EU AI Act?

The EU AI Act makes transparency central to compliance, requiring companies to disclose key AI system information. Beyond regulation, transparency builds trust, drives innovation, and creates a competitive advantage. This text highlights why it matters, what to disclose, and how to start adopting transparency measures in your company.

Transparency under the EU AI Act: What should you know?

Transparency, as outlined in the regulation, requires companies to provide clear information about training data, algorithms used, and decision-making processes, aiming to mitigate risks and protect fundamental rights.

To determine the level of transparency the regulation requires for a given system, you need to assess its risk on a case-by-case basis, because the EU AI Act (the European Artificial Intelligence Regulation) classifies AI systems by the level of risk they present. To know what level of transparency your company must adopt, identify which category your system falls into: minimal risk, limited risk, or high risk.

Building and deploying mission-critical AI models is a staged, iterative process that requires refinement at each stage, both before and after a system starts facing the real world. Decision approaches should be challenged during both training and inference. What does that mean exactly?

Let’s briefly analyze each category to get a clearer idea of the “transparency” required by the EU AI Act:

Minimal-Risk Systems

These include most AI systems that present low or negligible risks. For instance, music or movie recommendation systems and automatic writing tools on email platforms. These systems are not subject to specific obligations under the AI Act but remain regulated by other applicable laws, such as the GDPR.

Limited-Risk Systems

Systems considered as “limited risk” are subject to specific transparency obligations. For example, when using chatbots or content-generating AI systems, it is mandatory to clearly inform users about the artificial nature of the interaction or content. Additionally, the content generated or modified with the help of AI—images, audio, or video files (e.g., deepfakes)—must be clearly labeled as AI-generated so that users are aware of it.

For limited-risk AI systems, the main obligations focus on transparency and on clearly informing users about the artificial nature of interactions or generated content. This involves disclosing that the content was generated by AI, designing the model to prevent it from generating illegal content, and publishing summaries of copyrighted data used for training.

High-Risk Systems

This is our Achilles’ heel under the EU AI Act. These systems are classified as “high risk” because mistakes or biases in their design or operation could cause harm to individuals’ rights, such as discrimination, loss of opportunities, or even threats to safety.

Examples of high-risk AI systems include AI tools used to screen job applications or make hiring decisions; AI systems used to diagnose diseases or recommend treatments; AI systems used to grade or assess exams; and AI systems for autonomous cars, among others.

Under the EU AI Act, companies managing high-risk AI systems must adhere to specific transparency obligations. These include clearly identifying the provider and their authorized representative, if applicable, and specifying the system’s purpose, accuracy, robustness, cybersecurity measures, and associated risks.

Companies must also explain how the AI generates its outputs, particularly if its behavior varies across demographic groups. Information about the data used for training, testing, and validation must align with the system’s intended purpose. Human oversight mechanisms are required to enable understanding and intervention in the system’s decisions.

Additionally, operational needs such as computational resources, maintenance, and updates must be documented, along with logging and auditing tools to ensure compliance and facilitate investigations, if needed.
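The three categories above can be summarized as a lookup from risk category to the transparency duties this article lists. The following is a minimal Python sketch, not a legal classification tool: `RiskCategory`, `TRANSPARENCY_DUTIES`, and `duties_for` are hypothetical names chosen for illustration, and the duty descriptions paraphrase this article rather than the text of the regulation.

```python
from enum import Enum


class RiskCategory(Enum):
    """Risk categories as discussed in this article (hypothetical naming)."""
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"


# Duties paraphrased from the article; an illustrative summary, not legal advice.
TRANSPARENCY_DUTIES = {
    # No specific AI Act transparency duties; other laws such as the GDPR still apply.
    RiskCategory.MINIMAL: [],
    RiskCategory.LIMITED: [
        "inform users that they are interacting with an AI system",
        "label AI-generated or AI-modified content",
    ],
    RiskCategory.HIGH: [
        "identify the provider and any authorized representative",
        "state purpose, accuracy, robustness, cybersecurity measures, and risks",
        "explain how the system generates its outputs",
        "describe the training, testing, and validation data",
        "provide human oversight mechanisms",
        "document operational needs, logging, and auditing tools",
    ],
}


def duties_for(category: RiskCategory) -> list[str]:
    """Return a copy of the transparency duties listed for a risk category."""
    return list(TRANSPARENCY_DUTIES[category])


if __name__ == "__main__":
    for category in RiskCategory:
        print(f"{category.value}: {len(duties_for(category))} duties listed")
```

Keeping the duties in a single table makes it easy to review them with legal counsel and to adjust them as guidance on the regulation evolves.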

Transparency and Personal Data: Building Trust with Users

There is one more element in this debate that is both concerning and necessary to address: the processing of personal data by AI systems.

In the context of AI, transparency is especially critical due to the intensive use of Big Data and large-scale personal data processing, often generating complex inferences that can identify individuals or social groups. These inferences, such as recommendations based on previously collected preferences or sensitive automated decisions (e.g., credit or health eligibility), pose significant ethical and regulatory challenges for companies.

AI inferences appear not only in algorithmic recommendations based on previously collected preferences or profile analysis but also in other, more curious situations. Let’s look at some famous examples from the past. In 2018, the media reported on a new patent for Amazon’s virtual assistant “Alexa” describing how it could detect when a consumer was sick and suggest remedies, based on the user’s tone of voice and other signals during a conversation with the assistant.

A more controversial case ended up in Canadian courts after a consumer complaint. The startup “We Vibe,” a provider of vibrators, collected personal data on the usage of its products, including highly sensitive information such as days of use, duration, speed, pulse, and preferred settings, among others. It then made various inferences about its consumers based on this data processing, which included AI.

Another common example involves health data collection operations carried out by hospitals, clinics, or laboratories. Initially, such data might be collected for the purpose of performing a service, such as a lab test. However, depending on the inferences made from this data, other processing purposes distinct from the initial ones can be identified. This happens because AI can learn something new from inferences generated during data processing.

This scenario highlights the need to align entire operations involving data and systems with the ethical use of AI. As provided in the GDPR and the AI Act, transparency is not only a regulatory requirement but also a foundation for ethical practices in AI use.

The Great Dilemma: Trade Secret vs. Transparency

The EU AI Act also recognizes the importance of protecting trade secrets and proprietary information of companies, balancing the obligation of transparency with the right of companies to safeguard their most sensitive and confidential information. – Ana de Alancar

Under the EU AI Act, high-risk AI systems have rigorous transparency and compliance obligations that are incompatible with the total concealment of information under the pretext of “trade secrets.” However, there are ways to balance the protection of trade secrets with the fulfillment of legal obligations.

For high-risk systems, companies are required to ensure technical transparency by explaining the general parameters of the system’s logic and providing detailed technical documentation to demonstrate compliance. They must also grant access to regulatory authorities, ensuring that information about training, testing, and validation is available for audits. Additionally, companies need to demonstrate effective risk management by documenting the origin and characteristics of the data used, as well as the processes involved in fine-tuning and validation.

Although the regulation recognizes that trade secrets must be protected, this does not exempt companies from providing essential information to stakeholders (regulators, users, and third parties affected). However, there are ways to limit the impact on the intellectual protection of organizations, including:

- Sharing only the strictly necessary information with regulators. This means that sensitive, in-depth technical information should be provided only to the competent authorities, under strict confidentiality clauses that protect proprietary details.

- Providing strictly necessary explanations depending on the risk category of the AI system. This means that the system’s logic should be described in general terms, avoiding overly specific technical details that could compromise the company’s intellectual property rights. Explanations should focus on demonstrating the system’s general functionality without exposing all the intricate details of the code and its architecture. Exceptions may apply if authorities formally request more detail, although such cases would remain subject to debate with legal experts.

- Applying contractual protection. This means that, when dealing with users and third parties, companies can, depending on the case, limit the disclosure of detailed information through non-disclosure agreements (NDAs) or confidentiality clauses in policies and terms and conditions.

What companies should not do to protect trade secrets and intellectual property rights is refuse to comply with the obligation of transparency. Minimum transparency is mandatory. Alleging trade secrets without providing any information, explanation, or documentation can be considered non-compliance by authorities. The same applies to the omission of information about AI systems and their data processing practices. For instance, it is essential to ensure that data use complies with the EU AI Act, the GDPR (and other applicable legislation, if any), and that companies are transparent about the adequacy of their data, with a focus on training set representativeness and diversity across population groups, to prevent bias and discrimination.

Companies that refuse to be transparent may face audits by regulatory bodies, fines depending on the case, and even the banning of their systems in the European Union.

Why Transparency is Non-Negotiable

The challenge is clear: how can companies meet their legal obligations without embracing transparency? The answer is simple—they cannot. Without providing clear and accessible information to consumers and users, it becomes impossible to uphold the rights and obligations enshrined in the EU AI Act, GDPR, and other relevant regulations governing businesses in the EU. Transparency is the foundation upon which all responsible AI operations are built—it is the critical first step to operating ethically and legally in the digital age.

Moreover, transparency serves as the backbone of interpretability and explainability, two principles that are deeply embedded in both the AI Act and the GDPR.

Interpretability and explainability are the cornerstones of responsible and ethical AI use. Interpretability ensures that a company and its developers can deeply understand how an AI system operates, recognizing that some models are inherently more transparent than others. Meanwhile, explainability bridges the gap between complex algorithms and end-users, empowering consumers to grasp the reasoning behind decisions that directly impact their lives. For instance, when a job applicant is rejected by an AI-driven CV screening system, they deserve a clear, comprehensible explanation of the logic behind their disqualification.

These two principles are not just technical necessities—they are strategic imperatives. By embracing interpretability and explainability, companies go beyond mere regulatory compliance. They establish trust, enhance user confidence, and demonstrate a commitment to fairness and accountability. Organizations that prioritize these practices position themselves as ethical innovators and leaders in consumer-centered AI, building a competitive edge in an increasingly transparency-driven market.
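The CV screening example above can be made concrete with a deliberately simple model. The sketch below assumes an inherently interpretable linear score, where each feature's contribution is its weight times its value; the feature names, weights, and threshold are invented for illustration, and real screening systems are usually more complex and may need dedicated explanation techniques.

```python
def explain_score(weights: dict[str, float],
                  features: dict[str, float],
                  threshold: float) -> dict:
    """Score a candidate with a linear model and report what drove the result.

    All names and numbers passed in are hypothetical; this is not a real
    screening model.
    """
    # Each feature contributes weight * value; missing features count as 0.
    contributions = {
        name: weights[name] * features.get(name, 0.0) for name in weights
    }
    score = sum(contributions.values())
    # Sort ascending so the factors that lowered the score most come first.
    ranked = sorted(contributions.items(), key=lambda item: item[1])
    return {
        "score": score,
        "accepted": score >= threshold,
        "lowest_contributions": ranked[:2],
    }


if __name__ == "__main__":
    result = explain_score(
        weights={"years_experience": 0.5, "skills_match": 2.0,
                 "missing_certification": -1.0},
        features={"years_experience": 2.0, "skills_match": 0.25,
                  "missing_certification": 1.0},
        threshold=1.0,
    )
    print(result)
```

An explanation built from `lowest_contributions` tells the applicant which factors weighed most against them, which is the kind of clear, comprehensible reasoning the paragraph above calls for.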

Transparency Tools: The Open Ethics Label

To summarize, according to the EU AI Act, all companies that develop, market, or use artificial intelligence (AI) systems in the EU will be subject to transparency obligations. Therefore, companies, regardless of the risk of their AI systems, will have to comply with transparency measures, each in their own way, depending on the type of AI applications they use. In this sense, tools for adopting transparency in practice will be essential.

We at the Open Ethics Initiative are a global and inclusive movement dedicated to uniting citizens, legislators, engineers, and subject-matter experts to ensure the ethical and transparent design and deployment of artificial intelligence systems. By promoting self-disclosure and open standards, we aim to bridge the gap between society and technology, fostering positive societal impact and building trust in AI solutions.

We have developed the Open Ethics Label, a tool for creating transparency disclosures. It is a promising solution for companies wishing to align with the requirements of the EU AI Act. While the word “Label” in the name may seem to suggest sorting AI products into compliant and non-compliant, the tool acts more like an ingredients table: it offers consumers a clear breakdown of the components that make up an AI system, just as nutritional information tells you what’s inside a cookie.

Following this same principle, the transparency disclosure allows organizations to document and communicate, in a structured manner, the critical aspects of their AI systems, covering key elements such as:

- The origin and quality of training data, to identify and mitigate biases.

- The general logic of algorithms, promoting explainability and security.

- The decision space, clarifying the possibilities and limits for the automated decisions.

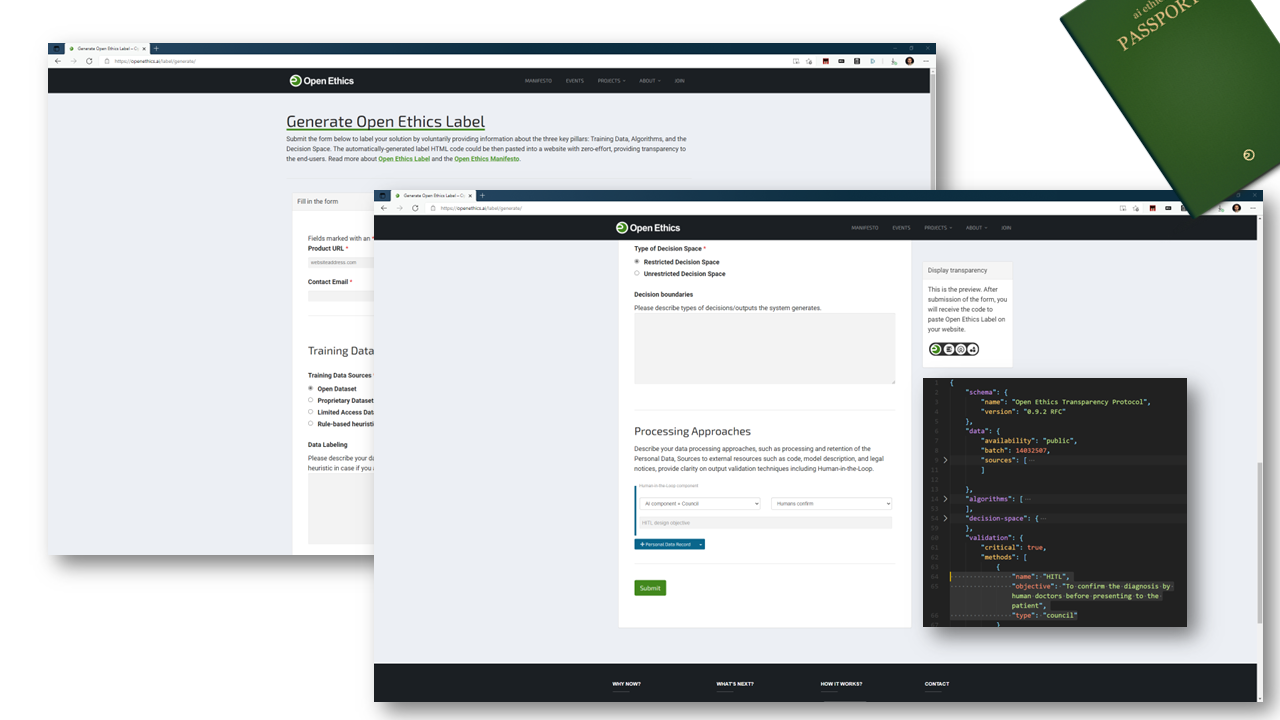
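To illustrate what a structured, machine-readable disclosure of these three elements might look like, here is a minimal Python sketch. The `TransparencyDisclosure` class and all of its field names are assumptions made for this example; they are not the actual Open Ethics Label schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class TransparencyDisclosure:
    """Hypothetical disclosure record; NOT the actual Open Ethics Label schema."""
    product_name: str
    training_data_origin: str          # where the training data comes from
    training_data_quality_notes: str   # e.g. known gaps or bias checks
    algorithm_logic_summary: str       # general logic, in plain language
    decision_space: list[str] = field(default_factory=list)  # possible outcomes


def to_machine_readable(disclosure: TransparencyDisclosure) -> str:
    """Serialize the disclosure as JSON with a stable key order."""
    return json.dumps(asdict(disclosure), sort_keys=True, indent=2)


if __name__ == "__main__":
    example = TransparencyDisclosure(
        product_name="Example CV screener",
        training_data_origin="Licensed historical applications (illustrative)",
        training_data_quality_notes="Reviewed for representativeness across groups",
        algorithm_logic_summary="Ranks applications by skills match",
        decision_space=["shortlist", "refer to human reviewer"],
    )
    print(to_machine_readable(example))
```

Listing the decision space explicitly shows readers which outcomes the system can and cannot produce, without exposing code or architecture.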

How Does the Open Ethics Label Work?

Our aim was to introduce a structured and transparent method for organizations to disclose key aspects of their AI systems. To generate the Open Ethics Label, complete the transparency disclosure web form for your product; you can start from here. Any provider or developer can fill it out, deliberately choosing what to disclose and focusing on areas such as the origin and quality of training data, the general logic of the algorithms, and the boundaries of automated decision-making.

Once the form is submitted, we receive it and add a timestamp. We then issue a SHA3-512 cryptographic signature on the submitted data. The visual representation of the label and the machine-readable disclosure are generated fully automatically and sent to you. You can then add the label’s HTML code to your website to display standardized information about your digital product. This label bridges the gap between technical details and user understanding, ensuring transparency while aligning with regulatory frameworks like the EU AI Act.
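As a rough illustration of the timestamp-and-fingerprint step, the sketch below uses Python's standard `hashlib.sha3_512`. It rests on assumptions: the record layout, the `stamp_and_digest` and `verify` helpers, and the JSON canonicalization are hypothetical, and the actual Open Ethics pipeline may work differently. Note that SHA3-512 is a hash function, so this sketch computes a digest that makes later changes detectable; whether the real service additionally signs that digest with a private key is not covered here.

```python
import hashlib
import json
from datetime import datetime, timezone


def _canonical_bytes(record: dict) -> bytes:
    """Serialize with sorted keys and fixed separators so equal data hashes equally."""
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")


def stamp_and_digest(submission: dict) -> dict:
    """Attach a UTC timestamp and a SHA3-512 digest to a submitted disclosure."""
    record = {
        "submitted_at": datetime.now(timezone.utc).isoformat(),
        "disclosure": submission,
    }
    digest = hashlib.sha3_512(_canonical_bytes(record)).hexdigest()
    return {**record, "sha3_512": digest}


def verify(stamped: dict) -> bool:
    """Recompute the digest over timestamp and disclosure and compare it."""
    record = {
        "submitted_at": stamped["submitted_at"],
        "disclosure": stamped["disclosure"],
    }
    return hashlib.sha3_512(_canonical_bytes(record)).hexdigest() == stamped["sha3_512"]


if __name__ == "__main__":
    stamped = stamp_and_digest({"product_name": "Example CV screener"})
    print(stamped["submitted_at"], stamped["sha3_512"][:16], verify(stamped))
```

Because the timestamp is included in the hashed record, changing either the disclosure or its submission time afterwards makes `verify` return `False`.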

Balancing Transparency with Intellectual Property Protection

Balancing transparency with intellectual property protection is a central challenge for organizations. The Open Ethics Label supports this balance by encouraging internal dialogue to define clear boundaries for disclosure. This ensures that companies can comply with regulatory obligations without compromising trade secrets, tailoring their transparency practices to align with strategic goals.

As we know, the level of transparency required from each company by regulators will depend on the degree of risk associated with the AIs they develop, use, or commercialize. In any case, all companies will be subject to the transparency obligations set forth by the legislation. For this reason, we believe that measures like the Open Ethics Label, our disclosure tool, will be fundamental in supporting companies to meet transparency requirements and align with the EU AI Act.

Additionally, we at Open Ethics understand the nuances of regulatory and consumer transparency requirements and are available to advise organizations on the appropriate level of transparency for each case. The transparency disclosure also addresses this aspect in the final section of the webform, where organizations can detail their data processing methods and offer insights into their output validation techniques, including the use of Human-in-the-Loop processes.

Why This Solution Could Be Relevant for Your Organization

The tool we offer stands out because it focuses entirely on transparency. Today, as can be observed in the market, many compliance tools for the EU AI Act are still predominantly aimed at mapping or risk management of AI systems, often neglecting the issue of transparency. This poses a challenge especially for smaller companies, whose operations involving AI are less complex and therefore do not require extensive risk mapping or assessment of AI systems, as many of these companies do not use high-risk systems and are not covered under this category of the regulation.

The Open Ethics Label is a simple, free, and powerful tool for organizations looking to meet the transparency requirements set forth by the EU AI Act. It is designed for accessibility and ease of use, enabling developers and deployers to disclose critical information about their AI systems.

It is important to highlight that our solution is highly adaptable to a wide range of AI applications. Whether for general-purpose systems or high-risk ones, the flexibility of the Open Ethics Label allows your organization to tailor disclosures to specific use cases, ensuring compliance in a practical and scalable way. This not only meets regulatory obligations but also creates a transparent narrative that highlights the responsibility and innovation driving your product.

But that’s not all: by incorporating sections dedicated to personal data processing and human-in-the-loop (HITL) oversight, the Open Ethics Label addresses one of today’s most pressing concerns—data transparency. Fully aligned with the principles of the GDPR and the EU AI Act, our solution also builds trust with users and regulators.

Risk mapping and assessment are essential and urgent obligations established by the EU AI Act, especially for high-risk AI systems. These activities are indispensable for identifying and mitigating potential negative impacts, such as discrimination, privacy violations, or security failures, and must be carried out by companies to ensure regulatory compliance.

However, regardless of the level of risk, transparency is a fundamental measure for all organizations, as it builds trust with users, regulators, and other stakeholders. Although we do not perform risk mapping or assessment with the Open Ethics Label, we offer a powerful and free solution to address transparency in a structured and intuitive way. This makes it an ideal complement to compliance initiatives, helping companies to highlight their ethics, responsibility, and commitment to transparent practices in the age of artificial intelligence.

Transparency: More Than Just a Legal Obligation in the EU?

As we know, the EU AI Act establishes a significant focus on transparency as an essential pillar for compliance and ethics in the development and use of AI. But there is an aspect of transparency that goes beyond the legal and regulatory debate. By being transparent, companies respect their consumers’ autonomy, empowering them to make informed and responsible decisions.

That is why transparency is increasingly becoming a competitive advantage for many companies in the European Union, as they leverage it to build consumer trust and loyalty while strengthening their brand and market reputation.

Many studies show that transparency is crucial for building and maintaining a strong corporate reputation, which is essential for long-term success. This is particularly true for technologies like artificial intelligence, which often operate in ways that appear opaque and risk perpetuating the biases present in our society.

With the deadline for compliance with the EU AI Act approaching, starting to implement tools like the transparency disclosure is not just a demonstration of commitment to compliance but also an essential step toward ensuring trust, ethics, and innovation in AI. In addition to facilitating regulatory adaptation, this solution helps companies position themselves as leaders in the responsible use of artificial intelligence.

Transparency isn’t just about meeting regulatory requirements; it’s a powerful strategic advantage. With this in mind, we created the Open Ethics Label as a free and accessible tool to help organizations establish themselves as leaders in ethical AI. It builds trust with key stakeholders, including investors, consumers, and the general public, by offering clear and reliable information. It also empowers users to make informed decisions, which fosters trust and strengthens the organization’s reputation in the market.
