Why does algorithmic transparency need a protocol?

Consumers are confused. They are exposed to products of multiple origins, each following its own path to “ethical” data processing and decision-making. Is there anything we can do?

As (algorithmic) operations become more complex, we realize that we can rely less and less on the methods of the past, when a Privacy Policy or Terms and Conditions served (did they?) to build trust in a business. Moreover, they rarely helped users understand what happens to their data under the hood. “I agree, I understand, I accept”: the big lies we tell ourselves when clicking through a website’s cookie notice or ticking the checkbox of yet another digital platform.

In the era of artificial intelligence, privacy and cybersecurity risks remain, but the risk profile of every service is now expanding to include bias and discrimination. What should we do? A typical answer is top-down regulation from national and cross-national bodies. Countries and trade blocs are now competing to set AI ethics guidelines and standards. Good.

But what if you’re building an international business? As a business, you have to comply. After tons of paperwork (thankfully, it’s digital now!), you can get established in one single economic space. Once you’re there, you can move to the next one only by repeating the costly bureaucratic procedure. Unfortunately, this does not scale. We call this the “cost of compliance”, and it is high. One possible way around this scalability problem is to disclose a product’s modus operandi once and match that disclosure against the existing requirements of each market. To make this possible, we need a universally accepted concept of product disclosure.
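To make the idea of “disclose once, match per market” more concrete, here is a minimal Python sketch. It assumes that a disclosure can be reduced to a set of factual statements and that each market’s requirements can be expressed as the set of statements it expects. The `Disclosure` class, the `match_markets` function, the market names, and the statement labels are all hypothetical illustrations; they are not taken from any standard or from OETP.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Disclosure:
    """Hypothetical disclosure: a product name plus a set of factual statements."""
    product: str
    facts: frozenset[str]


# Hypothetical per-market requirements (illustrative labels, not real regulations).
MARKET_REQUIREMENTS: dict[str, frozenset[str]] = {
    "market_a": frozenset({"data_stored_in_region", "human_oversight"}),
    "market_b": frozenset({"human_oversight", "bias_testing_documented"}),
}


def match_markets(
    disclosure: Disclosure, requirements: dict[str, frozenset[str]]
) -> dict[str, list[str]]:
    """For each market, list the required facts the disclosure does not cover.

    An empty list means the single disclosure already satisfies that market.
    """
    return {
        market: sorted(required - disclosure.facts)
        for market, required in requirements.items()
    }


if __name__ == "__main__":
    chatbot = Disclosure(
        product="chatbot",
        facts=frozenset({"human_oversight", "bias_testing_documented"}),
    )
    for market, gaps in match_markets(chatbot, MARKET_REQUIREMENTS).items():
        status = "ready" if not gaps else f"missing {gaps}"
        print(f"{market}: {status}")
```

The disclosure is written once; only the requirement sets differ per market, so entering a new market means adding a requirement set rather than producing a new disclosure. Real regulatory requirements are rarely this crisp, so the set-difference check should be read as an illustration of the idea, not as a compliance tool.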

What is self-disclosure?

Digital diets and enablers of choice

Self-disclosure (or simply disclosure) has evolved greatly in the food industry, where we have grown used to nutrition labels and nutrition tables. Proteins, fats, carbs, expiry dates, allergens, etc. are now our best friends in the grocery aisle. What if we could disclose product risk in the same manner as we do for food (or electrical products, or construction materials)? It’s good to have top-down regulation ensuring that our digital “diet” is safe. At the same time, a one-size-fits-all approach leaves no room for individual preferences. Self-disclosure enables individual choices, which in turn enable bottom-up regulatory mechanisms. Self-disclosure is thus essential for democratic societies. However, as happened in other industries, every vendor may choose to disclose in its own way. Complex technical language and inconsistent marketing wording make such disclosures hard for consumers to navigate. Clearly, we need standards.

Why are AI ethics standards not enough?

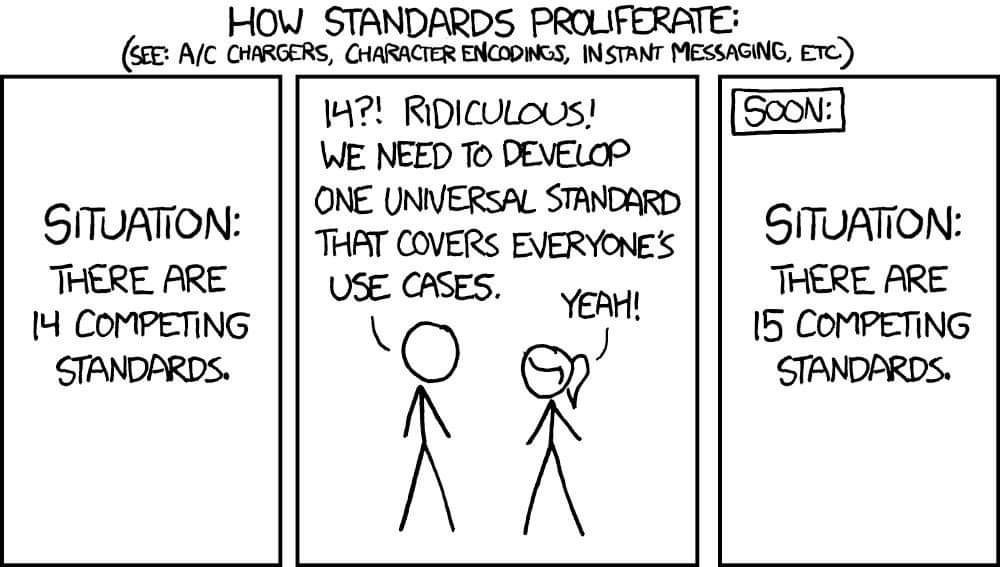

On the competition of standards

Transparency is a buzzword. ISO, NIST, the EC, CENELEC, the EDPB, the ICO, and others each have their own vision of which standards digital products and services should adhere to. Faced with this palette of options, not only end-users but also experts easily get confused. Instead of solving their products’ ethical issues and focusing on building user-centered products, DPOs, CIOs, CISOs, and other XXOs are forced to resolve false dilemmas. In the dynamic environment in which innovative startups (and now almost every other business) operate, flexibility is key to survival. Meanwhile, consumers are exposed to products of multiple origins, each following its own path to “ethical” data processing and decision-making. Is there anything we can do?

(NOT) Finding a common denominator

How to make a choice without criteria

One possible way is to agree on a single standard specifying what vendors must do before they can call their product “Ethical AI” (OR Trustworthy AI, OR Sustainable AI, OR Responsible AI, OR Best-in-the-universe AI). Given that we, as Humanity with a capital H, have not agreed on what “ethical” even means over the last 2,000 years, such expectations are perhaps far too high. Diversity is a good thing, after all. Choice is a good thing. Just as today’s ordinary consumer pushes brands to demonstrate more sustainability efforts, tomorrow’s new generation of consumers will demand algorithmic transparency. And this generation will get what it demands. At Open Ethics, we believe we should make it possible for consumers to create their own digital “diet” and become selective about the risks they accept.

Allowing for digital diets

A tool or a subject of decision-making

For this diet to be healthy, not only do top-down regulators need to be empowered to limit harmful impacts, but consumers also need to become literate in the digital risk landscape. Being “literate” does not just mean knowing what bad things could happen when using products powered by machine learning. It means being able to distinguish true risks from disinformation and harmful prejudices, such as those foretelling a “rise of the machines” and Terminator-like scenarios. As markets mature, we need more instruments: open-source tools, canvases, a common language, and the ability to modulate the AI supply chain.

5 Whys for the Transparency Protocol?

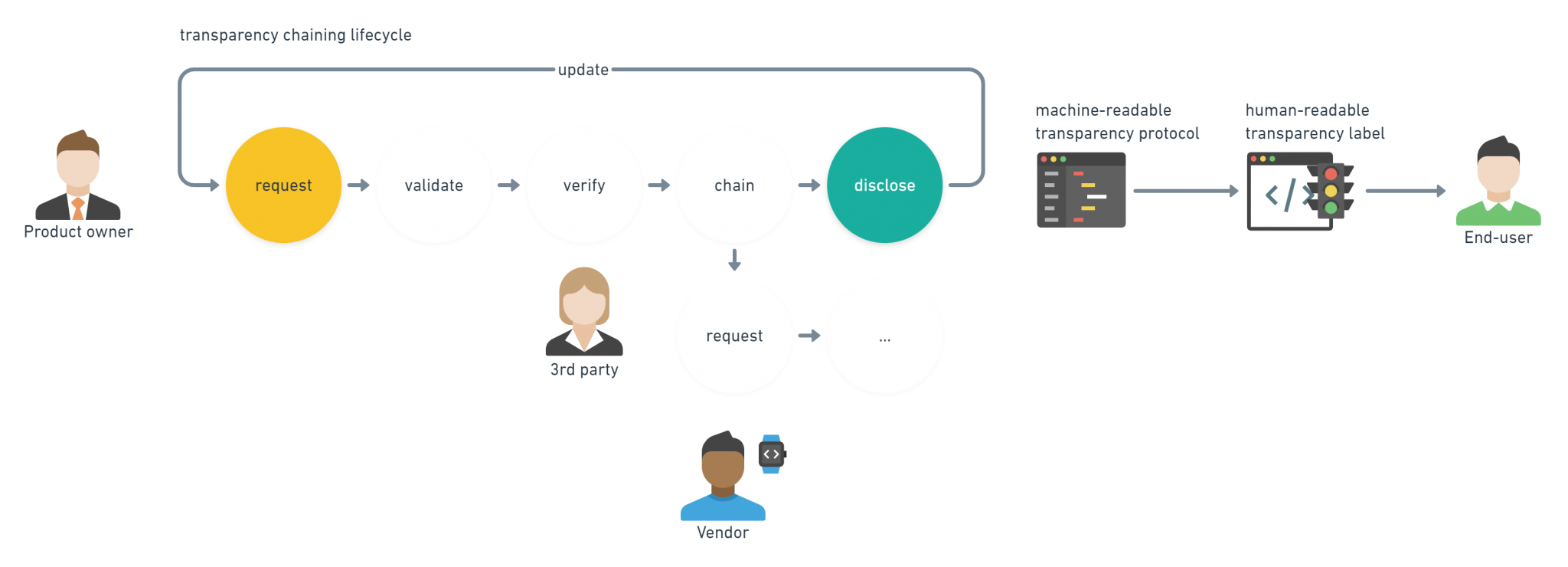

Value chain of the Transparency Protocol (OETP)

The Open Ethics Transparency Protocol (OETP) describes the creation and exchange of voluntary ethics disclosures for IT products across the supply chain. The Protocol describes how disclosures of data collection and data processing practices are formed, stored, validated, and exchanged in a standardized and open format. OETP provides facilities for:

- Informed consumer choices: End-users are able to make informed choices based on their own ethical preferences and on product disclosures.

- Industrial-scale monitoring: Discovery of best and worst practices within market verticals, technology stacks, and product value offerings.

- Legally-agnostic guidelines: Suggestions for developers and product owners, formulated in factual language, that are legally agnostic and can easily be transformed into product requirements and safeguards.

- Iterative improvement: Digital products, specifically those powered by artificial intelligence, could receive near-real-time feedback on how to improve their performance and ethical posture with respect to security, privacy, diversity, fairness, power balance, non-discrimination, and other requirements.

- Labeling and certification: Mapping to existing and future regulatory initiatives and standards.
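The four verbs in the OETP description (formed, stored, validated, exchanged) can be illustrated with a minimal Python sketch. It assumes a disclosure is a JSON object with a few required fields and that integrity is checked with a SHA-256 digest over a canonical JSON serialization. The field names, `REQUIRED_FIELDS`, and the functions `form_disclosure`, `seal`, `validate`, and `exchange` are hypothetical; the actual OETP schema and its validation mechanism may differ.

```python
import hashlib
import json

# Hypothetical required fields; the real OETP disclosure schema may differ.
REQUIRED_FIELDS = ("product", "vendor", "data_collection", "data_processing")


def form_disclosure(product: str, vendor: str,
                    data_collection: list[str], data_processing: list[str]) -> dict:
    """Form: build a disclosure as a plain JSON-compatible dict."""
    return {
        "product": product,
        "vendor": vendor,
        "data_collection": data_collection,
        "data_processing": data_processing,
    }


def _canonical_bytes(disclosure: dict) -> bytes:
    # Sorted keys and fixed separators give a stable byte representation,
    # so equal disclosures always produce the same digest.
    return json.dumps(disclosure, sort_keys=True, separators=(",", ":")).encode("utf-8")


def _missing_fields(disclosure: dict) -> list[str]:
    return [name for name in REQUIRED_FIELDS if name not in disclosure]


def seal(disclosure: dict) -> dict:
    """Store: package the disclosure together with a SHA-256 digest."""
    missing = _missing_fields(disclosure)
    if missing:
        raise ValueError(f"disclosure is missing fields: {missing}")
    digest = hashlib.sha256(_canonical_bytes(disclosure)).hexdigest()
    return {"disclosure": disclosure, "sha256": digest}


def validate(sealed: dict) -> bool:
    """Validate: required fields are present and the digest still matches."""
    disclosure = sealed.get("disclosure", {})
    if _missing_fields(disclosure):
        return False
    return hashlib.sha256(_canonical_bytes(disclosure)).hexdigest() == sealed.get("sha256")


def exchange(sealed: dict) -> str:
    """Exchange: serialize the sealed disclosure as JSON text for transport."""
    return json.dumps(sealed, sort_keys=True)


if __name__ == "__main__":
    record = seal(form_disclosure("chatbot", "ExampleCorp",
                                  ["email"], ["intent_classification"]))
    received = json.loads(exchange(record))
    print(validate(received))  # the untouched copy validates
    received["disclosure"]["data_processing"].append("ad_targeting")
    print(validate(received))  # the edit no longer matches the stored digest
```

A bare digest only shows that a copy matches the digest it travels with; anyone who edits the disclosure can also recompute the hash. Detecting tampering by a third party would require publishing the digest through a trusted channel or signing the disclosure, which this sketch deliberately leaves out.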

Why is the supply chain key?

As any industry matures, higher profit typically comes from improving operational efficiency to decrease costs. As the IT industry gets there, large product and service companies outsource non-core parts of their business to suppliers. In the IT world, outsourcing means not only having code developed externally but, increasingly, shifting toward third-party data processing. With more complex supply chains, surface-level transparency is no longer enough. As discussed earlier, compliance costs are high. Unlike small players, big companies can absorb them because these costs are only a small fraction of their cost structure. This is what indirectly makes compliance a friend of oligopoly, while SMEs are hit hard. To address this issue, Open Ethics has chosen to work with Internet Engineering Task Force (IETF) groups and to bring the Transparency Protocol forward as an open solution that increases transparency about how IT products are built and deployed across complex supply chains. We’re now working on the next iteration to advance the future of transparency, and we invite everyone to learn more and contribute to this open-source effort.
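Why surface-level transparency falls short can be shown with a small Python sketch of a supply chain. It assumes that each component publishes its own disclosure as a set of practice labels and lists its direct suppliers; walking the chain then reveals practices that the consumer-facing product’s own disclosure does not mention. The `Component` class, `chain_practices`, and all component and practice names are hypothetical illustrations, not part of OETP.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    """Hypothetical supply-chain node: its own disclosed practices plus its direct suppliers."""
    name: str
    practices: set[str]
    suppliers: list["Component"] = field(default_factory=list)


def chain_practices(root: Component) -> dict[str, set[str]]:
    """Map every practice disclosed anywhere in the chain to the components disclosing it.

    Uses an iterative depth-first walk; components are identified by name, so a
    supplier shared by several parents (or a cycle) is visited only once.
    """
    found: dict[str, set[str]] = {}
    visited: set[str] = set()
    stack = [root]
    while stack:
        component = stack.pop()
        if component.name in visited:
            continue
        visited.add(component.name)
        for practice in component.practices:
            found.setdefault(practice, set()).add(component.name)
        stack.extend(component.suppliers)
    return found


if __name__ == "__main__":
    analytics = Component("analytics-vendor", {"third_party_profiling"})
    ml_api = Component("ml-api", {"training_on_user_data"}, [analytics])
    app = Component("consumer-app", {"collects_email"}, [ml_api, analytics])

    print("surface level:", sorted(app.practices))
    for practice, sources in sorted(chain_practices(app).items()):
        print(f"whole chain: {practice} <- {sorted(sources)}")
```

Identifying components by name is a simplification made for the sketch; a real protocol would need stable, verifiable identifiers for each supplier’s disclosure.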

Featured image adapted from a photo by Eric Bruton on Unsplash